What is Kubernetes?

A Kubernetes cluster hosts containerized applications in a reliable, scalable way. Kubernetes was designed with DevOps in mind, and it makes maintenance tasks such as upgrades much simpler.

What is MicroK8s?

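MicroK8s is intended to run on workstations and personal devices. The easiest way to think of it is as a lightweight version of K8s. Like a full Kubernetes installation, MicroK8s can be clustered with other machines, and it supports most of the features used in K8s. Ubuntu Linux is the OS with the best MicroK8s compatibility, and installing it there is straightforward.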

Test environment

Welcome to Ubuntu 20.04.5 LTS (GNU/Linux 5.15.0-1025-gcp x86_64)

System information as of Mon Dec 5 11:51:12 UTC 2022

System load: 0.39 Processes: 107

Usage of /: 19.1% of 9.51GB Users logged in: 0

Memory usage: 24% IPv4 address for ens4: 10.178.0.3

Swap usage: 0%

0 updates can be applied immediately.

MicroK8s

Installing MicroK8s

Install command

$ sudo snap install microk8s --classic

Output

$ sudo snap install microk8s --classic

microk8s (1.25/stable) v1.25.4 from Canonical✓ installed

Firewall settings

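When the cluster runs multiple Pods, change the firewall rules with the following commands. Pods can only communicate with each other once the firewall allows this traffic.

$ sudo ufw allow in on cni0 && sudo ufw allow out on cni0

$ sudo ufw default allow routed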
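The rules above can be wrapped in a small helper so that the interface name is explicit. This is a minimal sketch, not part of the MicroK8s tooling: the function name `allow_pod_traffic` is hypothetical, and it assumes `ufw` is the active firewall and `cni0` is the Pod network interface, as in the commands above. Interface names can differ between MicroK8s releases and CNI plugins, so check `ip link` on your node and pass the right name as the first argument.

```shell
# Hypothetical helper (not a MicroK8s command): open the firewall for Pod traffic.
# Assumption: ufw is the firewall in use and $1 (default cni0) is the Pod network interface.
allow_pod_traffic() {
  iface="${1:-cni0}"
  # Skip quietly on hosts without ufw instead of failing.
  if ! command -v ufw >/dev/null 2>&1; then
    echo "ufw not found; no rules changed" >&2
    return 0
  fi
  # Same three rules as above: allow traffic in and out of the interface,
  # and allow routed (forwarded) traffic by default.
  sudo ufw allow in on "$iface" &&
    sudo ufw allow out on "$iface" &&
    sudo ufw default allow routed
}
```

Calling `allow_pod_traffic` applies the rules for `cni0`; `allow_pod_traffic <interface>` targets a different interface.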

Add-on settings

Enabling and disabling add-ons

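MicroK8s provides dedicated add-ons that extend the cluster with extra Kubernetes features.

Add-ons are enabled and disabled with the commands below.

$ sudo microk8s enable dns dashboard storage

$ sudo microk8s disable dns dashboard storage

Running the enable command activates the DNS, dashboard, and storage add-ons, after which those features can be used. Note that `storage` is deprecated in favor of `hostpath-storage`, as the warning in the output below shows.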
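A minimal sketch of enabling the same add-ons non-interactively and waiting until they are usable. It assumes the current user can run `microk8s` without `sudo` (for example after `sudo usermod -a -G microk8s $USER` and a new login session) and uses `hostpath-storage`, the name that the deprecation warning in the output below recommends instead of `storage`. The function name and the 120-second timeout are arbitrary choices; the Deployment names (`coredns`, `kubernetes-dashboard`, `hostpath-provisioner`) are the ones the add-ons create in `kube-system`, as the output below shows.

```shell
# Hypothetical helper; assumes microk8s runs without sudo for this user.
enable_core_addons() {
  # Block until the MicroK8s services report that they are ready.
  microk8s status --wait-ready >/dev/null || return 1
  # 'hostpath-storage' replaces the deprecated 'storage' alias.
  microk8s enable dns dashboard hostpath-storage || return 1
  # Wait for each Deployment created by the add-ons to finish rolling out.
  for d in coredns kubernetes-dashboard hostpath-provisioner; do
    microk8s kubectl -n kube-system rollout status "deployment/$d" --timeout=120s || return 1
  done
}
```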

Add-on output

$ sudo microk8s enable dns dashboard storage

Infer repository core for addon dns

Infer repository core for addon dashboard

Infer repository core for addon storage

Enabling DNS

Applying manifest

serviceaccount/coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

clusterrole.rbac.authorization.k8s.io/coredns created

clusterrolebinding.rbac.authorization.k8s.io/coredns created

Restarting kubelet

DNS is enabled

Enabling Kubernetes Dashboard

Infer repository core for addon metrics-server

Addon core/metrics-server is already enabled

Applying manifest

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

secret/microk8s-dashboard-token unchanged

If RBAC is not enabled access the dashboard using the token retrieved with:

microk8s kubectl describe secret -n kube-system microk8s-dashboard-token

Use this token in the https login UI of the kubernetes-dashboard service.

In an RBAC enabled setup (microk8s enable RBAC) you need to create a user with restricted

permissions as shown in:

https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/creating-sample-user.md

DEPRECIATION WARNING: 'storage' is deprecated and will soon be removed. Please use 'hostpath-storage' instead.

Infer repository core for addon hostpath-storage

Enabling default storage class.

WARNING: Hostpath storage is not suitable for production environments.

deployment.apps/hostpath-provisioner created

storageclass.storage.k8s.io/microk8s-hostpath created

serviceaccount/microk8s-hostpath created

clusterrole.rbac.authorization.k8s.io/microk8s-hostpath created

clusterrolebinding.rbac.authorization.k8s.io/microk8s-hostpath created

Storage will be available soon.

$ sudo microk8s disable dns dashboard storage

Infer repository core for addon dns

Infer repository core for addon dashboard

Infer repository core for addon storage

Disabling DNS

Reconfiguring kubelet

Removing DNS manifest

deployment.apps "coredns" deleted

pod/coredns-d489fb88-rm852 condition met

serviceaccount "coredns" deleted

configmap "coredns" deleted

service "kube-dns" deleted

clusterrole.rbac.authorization.k8s.io "coredns" deleted

clusterrolebinding.rbac.authorization.k8s.io "coredns" deleted

DNS is disabled

Disabling Dashboard

serviceaccount "kubernetes-dashboard" deleted

service "kubernetes-dashboard" deleted

secret "kubernetes-dashboard-certs" deleted

secret "kubernetes-dashboard-csrf" deleted

secret "kubernetes-dashboard-key-holder" deleted

configmap "kubernetes-dashboard-settings" deleted

role.rbac.authorization.k8s.io "kubernetes-dashboard" deleted

clusterrole.rbac.authorization.k8s.io "kubernetes-dashboard" deleted

rolebinding.rbac.authorization.k8s.io "kubernetes-dashboard" deleted

clusterrolebinding.rbac.authorization.k8s.io "kubernetes-dashboard" deleted

deployment.apps "kubernetes-dashboard" deleted

service "dashboard-metrics-scraper" deleted

deployment.apps "dashboard-metrics-scraper" deleted

Dashboard is disabled

DEPRECIATION WARNING: 'storage' is deprecated and will soon be removed. Please use 'hostpath-storage' instead.

Infer repository core for addon hostpath-storage

Disabling hostpath storage.

deployment.apps "hostpath-provisioner" deleted

storageclass.storage.k8s.io "microk8s-hostpath" deleted

serviceaccount "microk8s-hostpath" deleted

clusterrole.rbac.authorization.k8s.io "microk8s-hostpath" deleted

clusterrolebinding.rbac.authorization.k8s.io "microk8s-hostpath" deleted

Storage removed.

Remove PVC storage at /var/snap/microk8s/common/default-storage ? (Y/N):

Available add-ons

- dns: Deploy DNS. This addon may be required by others, thus we recommend you always enable it.

- dashboard: Deploy kubernetes dashboard.

- storage: Create a default storage class. This storage class makes use of the hostpath-provisioner pointing to a directory on the host.

- ingress: Create an ingress controller.

- gpu: Expose GPU(s) to MicroK8s by enabling the nvidia-docker runtime and nvidia-device-plugin-daemonset. Requires NVIDIA drivers to be already installed on the host system.

- istio: Deploy the core Istio services. You can use the microk8s istioctl command to manage your deployments.

- registry: Deploy a docker private registry and expose it on localhost:32000. The storage addon will be enabled as part of this addon.

Checking MicroK8s status

Status command

$ sudo microk8s.status

Status output

$ sudo microk8s.status

microk8s is running

high-availability: no

datastore master nodes: 127.0.0.1:19001

datastore standby nodes: none

addons:

enabled:

dashboard # (core) The Kubernetes dashboard

dns # (core) CoreDNS

ha-cluster # (core) Configure high availability on the current node

helm # (core) Helm - the package manager for Kubernetes

helm3 # (core) Helm 3 - the package manager for Kubernetes

hostpath-storage # (core) Storage class; allocates storage from host directory

metrics-server # (core) K8s Metrics Server for API access to service metrics

storage # (core) Alias to hostpath-storage add-on, deprecated

disabled:

cert-manager # (core) Cloud native certificate management

community # (core) The community addons repository

gpu # (core) Automatic enablement of Nvidia CUDA

host-access # (core) Allow Pods connecting to Host services smoothly

ingress # (core) Ingress controller for external access

kube-ovn # (core) An advanced network fabric for Kubernetes

mayastor # (core) OpenEBS MayaStor

metallb # (core) Loadbalancer for your Kubernetes cluster

observability # (core) A lightweight observability stack for logs, traces and metrics

prometheus # (core) Prometheus operator for monitoring and logging

rbac # (core) Role-Based Access Control for authorisation

registry # (core) Private image registry exposed on localhost:32000

Inspecting MicroK8s

Inspect command

$ sudo microk8s.inspect

Inspect output

$ sudo microk8s.inspect

Inspecting system

Inspecting Certificates

Inspecting services

Service snap.microk8s.daemon-cluster-agent is running

Service snap.microk8s.daemon-containerd is running

Service snap.microk8s.daemon-kubelite is running

Service snap.microk8s.daemon-k8s-dqlite is running

Service snap.microk8s.daemon-apiserver-kicker is running

Copy service arguments to the final report tarball

Inspecting AppArmor configuration

Gathering system information

Copy processes list to the final report tarball

Copy disk usage information to the final report tarball

Copy memory usage information to the final report tarball

Copy server uptime to the final report tarball

Copy openSSL information to the final report tarball

Copy snap list to the final report tarball

Copy VM name (or none) to the final report tarball

Copy current linux distribution to the final report tarball

Copy network configuration to the final report tarball

Inspecting kubernetes cluster

Inspect kubernetes cluster

Inspecting dqlite

Inspect dqlite

WARNING: The memory cgroup is not enabled.

The cluster may not be functioning properly. Please ensure cgroups are enabled

See for example: https://microk8s.io/docs/install-alternatives#heading--arm

Building the report tarball

Report tarball is at /var/snap/microk8s/4221/inspection-report-20221205_163431.tar.gz

Listing resources across all namespaces

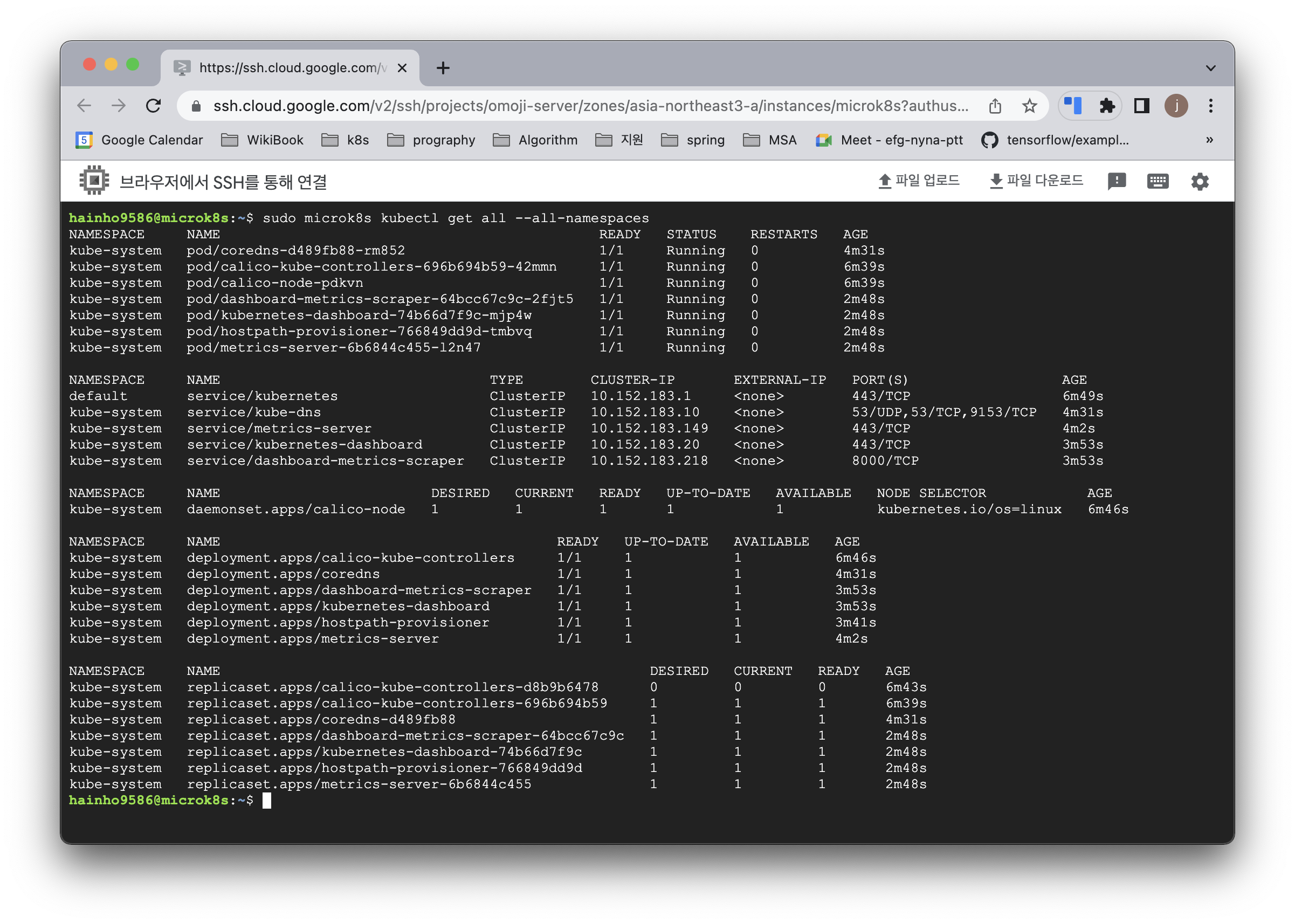

Command

$ sudo microk8s kubectl get all --all-namespaces

Output

$ sudo microk8s kubectl get all --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/calico-kube-controllers-696b694b59-42mmn 1/1 Running 0 34m

kube-system pod/metrics-server-6b6844c455-l2n47 1/1 Running 0 31m

kube-system pod/calico-node-pdkvn 1/1 Running 0 34m

kube-system pod/hostpath-provisioner-7d66c5478c-ptj9r 1/1 Running 0 19m

kube-system pod/dashboard-metrics-scraper-64bcc67c9c-cwd69 1/1 Running 0 19m

kube-system pod/coredns-d489fb88-8pjxx 1/1 Running 0 19m

kube-system pod/kubernetes-dashboard-74b66d7f9c-rz7jn 1/1 Running 0 19m

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 35m

kube-system service/metrics-server ClusterIP 10.152.183.149 <none> 443/TCP 32m

kube-system service/kube-dns ClusterIP 10.152.183.10 <none> 53/UDP,53/TCP,9153/TCP 21m

kube-system service/kubernetes-dashboard ClusterIP 10.152.183.193 <none> 443/TCP 20m

kube-system service/dashboard-metrics-scraper ClusterIP 10.152.183.231 <none> 8000/TCP 20m

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system daemonset.apps/calico-node 1 1 1 1 1 kubernetes.io/os=linux 35m

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

kube-system deployment.apps/calico-kube-controllers 1/1 1 1 35m

kube-system deployment.apps/metrics-server 1/1 1 1 32m

kube-system deployment.apps/hostpath-provisioner 1/1 1 1 20m

kube-system deployment.apps/dashboard-metrics-scraper 1/1 1 1 20m

kube-system deployment.apps/coredns 1/1 1 1 21m

kube-system deployment.apps/kubernetes-dashboard 1/1 1 1 20m

NAMESPACE NAME DESIRED CURRENT READY AGE

kube-system replicaset.apps/calico-kube-controllers-d8b9b6478 0 0 0 34m

kube-system replicaset.apps/calico-kube-controllers-696b694b59 1 1 1 34m

kube-system replicaset.apps/metrics-server-6b6844c455 1 1 1 31m

kube-system replicaset.apps/hostpath-provisioner-7d66c5478c 1 1 1 19m

kube-system replicaset.apps/dashboard-metrics-scraper-64bcc67c9c 1 1 1 19m

kube-system replicaset.apps/coredns-d489fb88 1 1 1 19m

kube-system replicaset.apps/kubernetes-dashboard-74b66d7f9c 1 1 1 19m

Listing Kubernetes namespaces

Command

$ kubectl get namespaces

Output

$ sudo microk8s kubectl get namespaces

NAME STATUS AGE

kube-system Active 60m

kube-public Active 60m

kube-node-lease Active 60m

default Active 60m

Listing Pods in the kube-system namespace

Command

$ kubectl get pods -n kube-system

Output

$ sudo microk8s kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-696b694b59-42mmn 1/1 Running 0 60m

metrics-server-6b6844c455-l2n47 1/1 Running 0 57m

calico-node-pdkvn 1/1 Running 0 60m

hostpath-provisioner-7d66c5478c-ptj9r 1/1 Running 0 45m

dashboard-metrics-scraper-64bcc67c9c-cwd69 1/1 Running 0 45m

coredns-d489fb88-8pjxx 1/1 Running 0 45m

kubernetes-dashboard-74b66d7f9c-rz7jn 1/1 Running 0 45m

Listing ReplicaSets in the kube-system namespace

Command

$ kubectl get replicasets -n kube-system

Output

$ sudo microk8s kubectl get replicasets -n kube-system

NAME DESIRED CURRENT READY AGE

calico-kube-controllers-d8b9b6478 0 0 0 61m

calico-kube-controllers-696b694b59 1 1 1 61m

metrics-server-6b6844c455 1 1 1 57m

hostpath-provisioner-7d66c5478c 1 1 1 46m

dashboard-metrics-scraper-64bcc67c9c 1 1 1 46m

coredns-d489fb88 1 1 1 46m

kubernetes-dashboard-74b66d7f9c 1 1 1 46m

Listing Deployments in the kube-system namespace

Command

$ kubectl get deployments -n kube-system

Output

$ sudo microk8s kubectl get deployments -n kube-system

NAME READY UP-TO-DATE AVAILABLE AGE

calico-kube-controllers 1/1 1 1 61m

metrics-server 1/1 1 1 58m

hostpath-provisioner 1/1 1 1 47m

dashboard-metrics-scraper 1/1 1 1 47m

coredns 1/1 1 1 47m

kubernetes-dashboard 1/1 1 1 47m

Listing Services in the kube-system namespace

Command

$ kubectl get services -n kube-system

Output

$ sudo microk8s kubectl get services -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

metrics-server ClusterIP 10.152.183.149 <none> 443/TCP 59m

kube-dns ClusterIP 10.152.183.10 <none> 53/UDP,53/TCP,9153/TCP 48m

kubernetes-dashboard ClusterIP 10.152.183.193 <none> 443/TCP 47m

dashboard-metrics-scraper ClusterIP 10.152.183.231 <none> 8000/TCP 47m

Creating a Pod with kubectl run

Command

$ sudo microk8s kubectl run mydeployment --image=alicek106/conposetest:balanced_web -- replicas=3 --port=80

Output

$ sudo microk8s kubectl run mydeployment --image=alicek106/conposetest:balanced_web -- replicas=3 --port=80

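pod/mydeployment created

Note: in recent kubectl versions (including the v1.25 used here), `kubectl run` only creates a single Pod and does not support `--replicas`. In the command above, everything after the standalone `--` (`replicas=3 --port=80`) is passed to the container as arguments, which is why the output reports a single Pod. To run three replicas, create a Deployment instead, for example `sudo microk8s kubectl create deployment mydeployment --image=alicek106/conposetest:balanced_web --replicas=3 --port=80`.

Viewing Kubernetes Deployments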

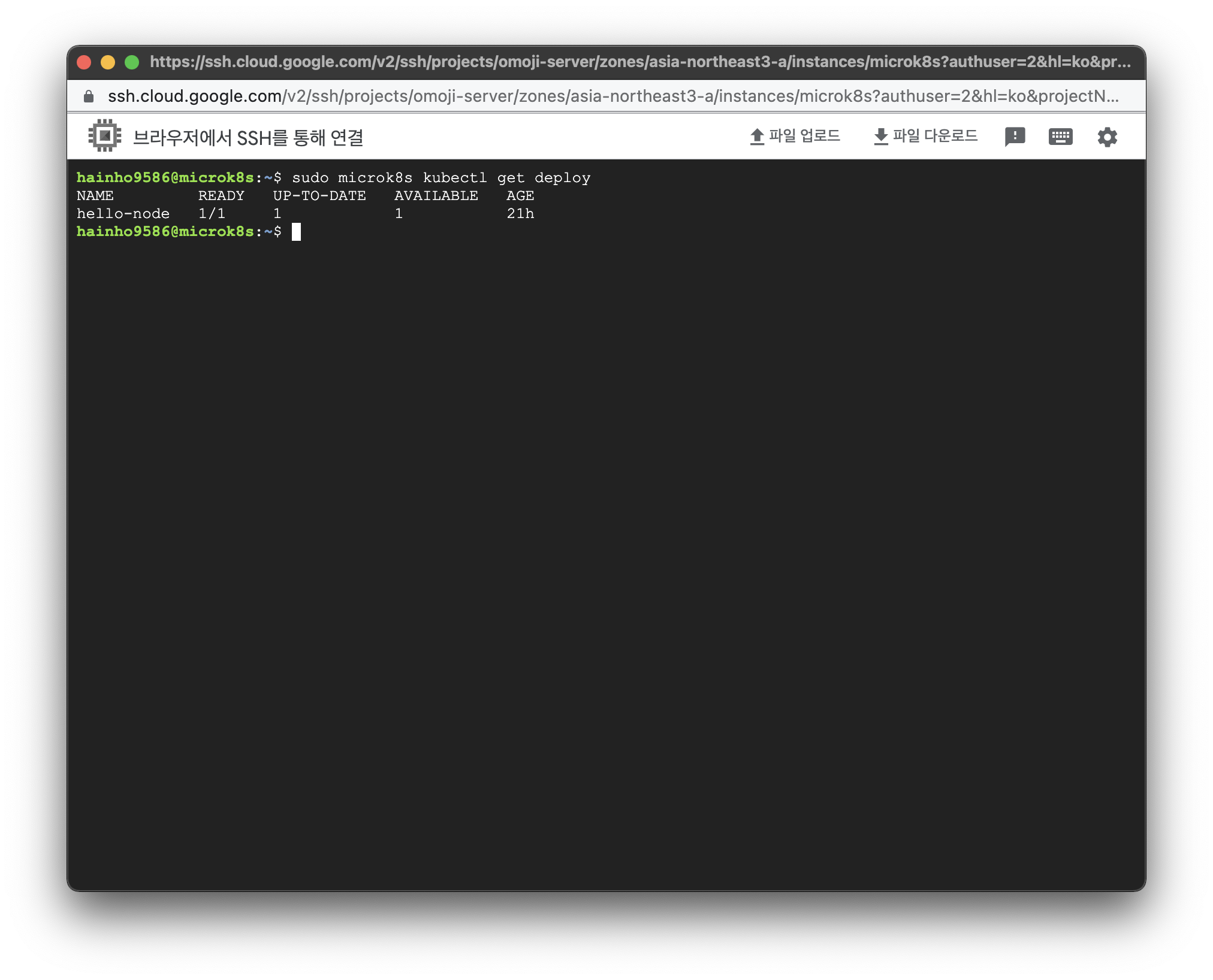

Command

$ sudo microk8s kubectl get deploy

Output

$ sudo microk8s kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

hello-node 1/1 1 1 21h

Viewing Kubernetes ReplicaSets

Command

$ sudo microk8s kubectl get rs

Output

$ sudo microk8s kubectl get rs

NAME DESIRED CURRENT READY AGE

hello-node-697897c86 1 1 1 21h

Viewing Kubernetes Pods

Command

$ sudo microk8s kubectl get po

Output

$ sudo microk8s kubectl get po

NAME READY STATUS RESTARTS AGE

hello-node-697897c86-fk6dx 1/1 Running 0 21h

Creating a Service object that exposes a Deployment externally

Command

$ sudo microk8s kubectl expose deployment <deployment-name> --type=LoadBalancer --port=8080

Output

$ sudo microk8s kubectl expose deployment hello-node --type=LoadBalancer --port=8080

service/hello-node exposed

Viewing Kubernetes Service information

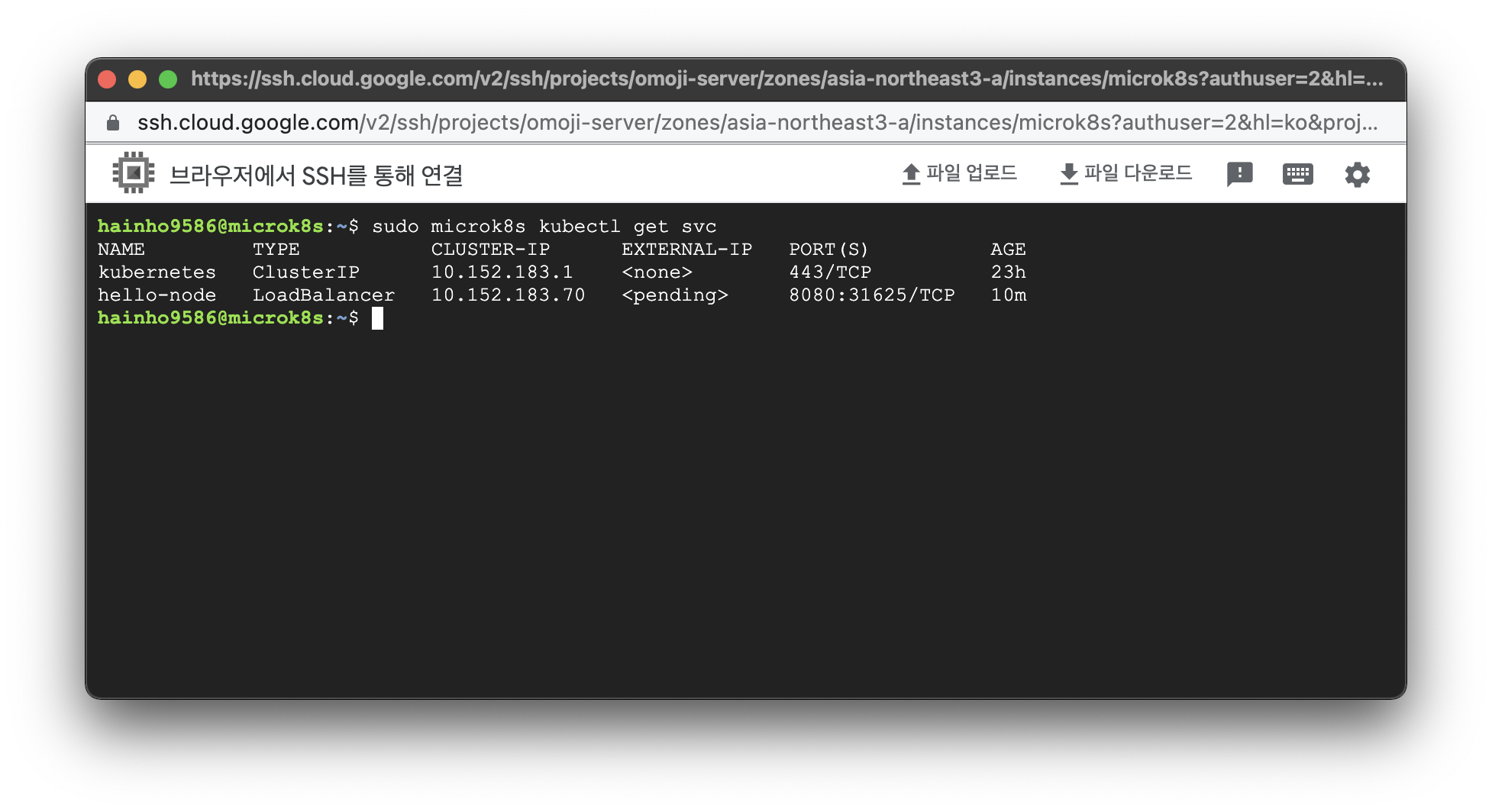

Command

$ sudo microk8s kubectl get svc

Output

$ sudo microk8s kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 23h

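hello-node LoadBalancer 10.152.183.70 <pending> 8080:31625/TCP 10m

EXTERNAL-IP stays `<pending>` because MicroK8s does not install a load-balancer implementation by default (the `metallb` add-on, shown as disabled in the status output earlier, provides one). The Service can still be reached through its NodePort, 31625 here.

Viewing detailed Kubernetes Service information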

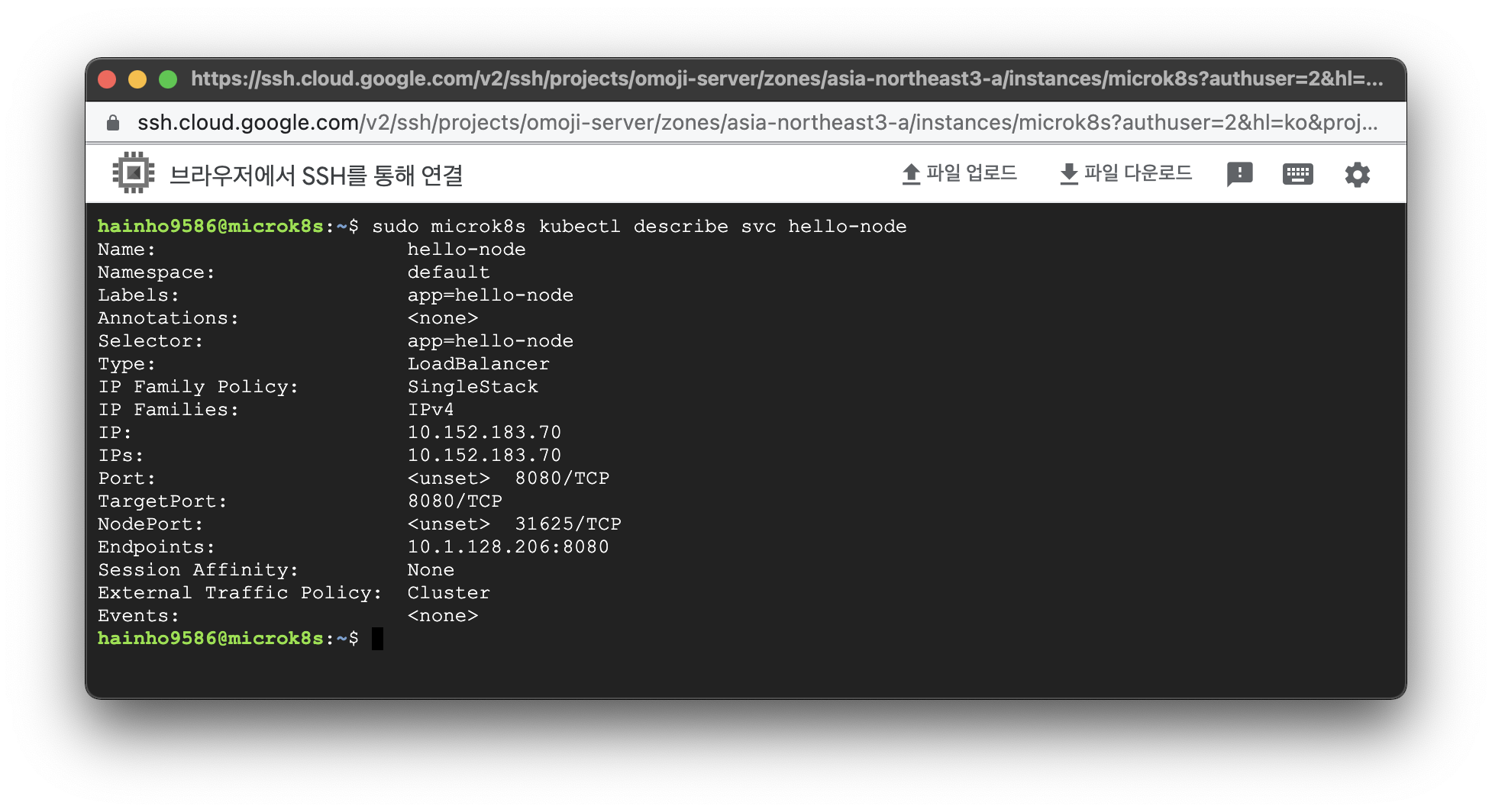

Command

$ sudo microk8s kubectl describe svc <service-name>

Output

$ sudo microk8s kubectl describe svc hello-node

Name: hello-node

Namespace: default

Labels: app=hello-node

Annotations: <none>

Selector: app=hello-node

Type: LoadBalancer

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.152.183.70

IPs: 10.152.183.70

Port: <unset> 8080/TCP

TargetPort: 8080/TCP

NodePort: <unset> 31625/TCP

Endpoints: 10.1.128.206:8080

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

Viewing Kubernetes nodes

Command

$ sudo microk8s kubectl get nodes

Output

$ sudo microk8s kubectl get nodes

NAME STATUS ROLES AGE VERSION

microk8s Ready <none> 24h v1.25.4

Creating a Kubernetes Deployment

Command

$ sudo microk8s kubectl create deployment <name> --image=<image>

Output

$ sudo microk8s kubectl create deployment kubernetes-bootcamp --image=gcr.io/google-samples/kubernetes-bootcamp:v1

deployment.apps/kubernetes-bootcamp created

Viewing Kubernetes status

Deployments command

$ sudo microk8s kubectl get deployments

Deployments output

$ sudo microk8s kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

hello-node 1/1 1 1 22h

kubernetes-bootcamp 1/1 1 1 2m48s

ReplicaSets command

$ sudo microk8s kubectl get replicasets

ReplicaSets output

$ sudo microk8s kubectl get replicasets

NAME DESIRED CURRENT READY AGE

hello-node-697897c86 1 1 1 22h

kubernetes-bootcamp-75c5d958ff 1 1 1 2m51s

Pods command

$ sudo microk8s kubectl get pods

Pods output

$ sudo microk8s kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-node-697897c86-fk6dx 1/1 Running 0 22h

kubernetes-bootcamp-75c5d958ff-j4fvb 1/1 Running 0 2m56s

Services command

$ sudo microk8s kubectl get services

Services output

$ sudo microk8s kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 24h

hello-node LoadBalancer 10.152.183.70 <pending> 8080:31625/TCP 69m

Pod details

Pod details command

$ sudo microk8s kubectl describe pods

Pod details output

$ sudo microk8s kubectl describe pods

Name: kubernetes-bootcamp-75c5d958ff-j4fvb

Namespace: default

Priority: 0

Service Account: default

Node: microk8s/10.178.0.5

Start Time: Tue, 06 Dec 2022 16:18:42 +0000

Labels: app=kubernetes-bootcamp

pod-template-hash=75c5d958ff

Annotations: cni.projectcalico.org/containerID: f1ceaae80cb4bc1398c34f7f4501b95dedb60d8985a0de5a0e11bfff353e1249

cni.projectcalico.org/podIP: 10.1.128.212/32

cni.projectcalico.org/podIPs: 10.1.128.212/32

Status: Running

IP: 10.1.128.212

IPs:

IP: 10.1.128.212

Controlled By: ReplicaSet/kubernetes-bootcamp-75c5d958ff

Containers:

kubernetes-bootcamp:

Container ID: containerd://9c8a0824de0cb9f9e1b671925837dac2fd2be882537c6193ef926b025e6de745

Image: gcr.io/google-samples/kubernetes-bootcamp:v1

Image ID: gcr.io/google-samples/kubernetes-bootcamp@sha256:0d6b8ee63bb57c5f5b6156f446b3bc3b3c143d233037f3a2f00e279c8fcc64af

Port: <none>

Host Port: <none>

State: Running

Started: Tue, 06 Dec 2022 16:18:58 +0000

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-8ctqs (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-8ctqs:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events: <none>

Reading a Kubernetes Pod's environment variables

Pod list command

$ sudo microk8s kubectl get pods

Pod list output

$ sudo microk8s kubectl get pods

NAME READY STATUS RESTARTS AGE

kubernetes-bootcamp-75c5d958ff-j4fvb 1/1 Running 0 17m

Pod environment variables command

$ sudo microk8s kubectl exec <pod-name> -- env

Pod environment variables output

$ sudo microk8s kubectl exec kubernetes-bootcamp-75c5d958ff-j4fvb -- env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=kubernetes-bootcamp-75c5d958ff-j4fvb

NPM_CONFIG_LOGLEVEL=info

NODE_VERSION=6.3.1

HELLO_NODE_PORT_8080_TCP_PORT=8080

KUBERNETES_SERVICE_HOST=10.152.183.1

KUBERNETES_PORT_443_TCP_ADDR=10.152.183.1

HELLO_NODE_SERVICE_HOST=10.152.183.70

HELLO_NODE_PORT_8080_TCP_PROTO=tcp

HELLO_NODE_PORT_8080_TCP_ADDR=10.152.183.70

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_PORT_443_TCP=tcp://10.152.183.1:443

HELLO_NODE_PORT_8080_TCP=tcp://10.152.183.70:8080

KUBERNETES_SERVICE_PORT=443

KUBERNETES_PORT=tcp://10.152.183.1:443

KUBERNETES_PORT_443_TCP_PROTO=tcp

KUBERNETES_PORT_443_TCP_PORT=443

HELLO_NODE_SERVICE_PORT=8080

HELLO_NODE_PORT=tcp://10.152.183.70:8080

HOME=/root

Connecting directly to a Kubernetes Pod

Connection commands

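$ sudo microk8s kubectl exec -it <pod-name> -- bash

# cat server.js

# curl localhost:8080

The kubernetes-bootcamp image ships with a built-in server.js file. Current kubectl versions expect `--` before the command to run; the older form without it still works but prints the deprecation warning seen in the output below.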
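The same check can be run without opening an interactive shell by passing the command after `--`. A minimal sketch: `curl_in_pod` is a hypothetical name, and it assumes the container image includes `curl` (the interactive session below shows that the kubernetes-bootcamp image does), that the app listens on port 8080, and that `microk8s` runs without `sudo`.

```shell
# Hypothetical helper: send an HTTP request to the app from inside a Pod.
# Usage: curl_in_pod <pod-name> [port]   (port defaults to 8080)
curl_in_pod() {
  # Everything after `--` is executed inside the container, not parsed by kubectl.
  microk8s kubectl exec "$1" -- curl -s "localhost:${2:-8080}"
}
```

For example, `curl_in_pod kubernetes-bootcamp-75c5d958ff-j4fvb` should print the same `Hello Kubernetes bootcamp!` line as the interactive `curl`.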

Connection output

$ sudo microk8s kubectl exec -it kubernetes-bootcamp-75c5d958ff-j4fvb bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@kubernetes-bootcamp-75c5d958ff-j4fvb:/# cat server.js

var http = require('http');

var requests=0;

var podname= process.env.HOSTNAME;

var startTime;

var host;

var handleRequest = function(request, response) {

response.setHeader('Content-Type', 'text/plain');

response.writeHead(200);

response.write("Hello Kubernetes bootcamp! | Running on: ");

response.write(host);

response.end(" | v=1\n");

console.log("Running On:" ,host, "| Total Requests:", ++requests,"| App Uptime:", (new Date() - startTime)/1000 , "seconds", "| Log Time:",new Date());

}

var www = http.createServer(handleRequest);

www.listen(8080,function () {

startTime = new Date();;

host = process.env.HOSTNAME;

console.log ("Kubernetes Bootcamp App Started At:",startTime, "| Running On: " ,host, "\n" );

});

root@kubernetes-bootcamp-75c5d958ff-j4fvb:/# curl localhost:8080

Hello Kubernetes bootcamp! | Running on: kubernetes-bootcamp-75c5d958ff-j4fvb | v=1

Reading Kubernetes Pod logs

Command

$ sudo microk8s kubectl logs <pod-name>

Output

$ sudo microk8s kubectl logs kubernetes-bootcamp-75c5d958ff-j4fvb

Kubernetes Bootcamp App Started At: 2022-12-06T16:18:58.790Z | Running On: kubernetes-bootcamp-75c5d958ff-j4fvb

Running On: kubernetes-bootcamp-75c5d958ff-j4fvb | Total Requests: 1 | App Uptime: 1387.093 seconds | Log Time: 2022-12-06T16:42:05.883Z

Running the Kubernetes proxy

Proxy command

$ sudo microk8s kubectl proxy

Proxy output

$ sudo microk8s kubectl proxy

Starting to serve on 127.0.0.1:8001

Proxy access result (http://localhost:8001/version)

$ curl http://localhost:8001/version

{

"major": "1",

"minor": "25",

"gitVersion": "v1.25.4",

"gitCommit": "872a965c6c6526caa949f0c6ac028ef7aff3fb78",

"gitTreeState": "clean",

"buildDate": "2022-11-14T22:13:32Z",

"goVersion": "go1.19.3",

"compiler": "gc",

"platform": "linux/amd64"

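}

Setting POD_NAME

$ export POD_NAME=$(sudo microk8s kubectl get pods -o go-template --template '{{range.items}}{{.metadata.name}}{{"\n"}}{{end}}')

This template prints the name of every Pod in the default namespace, so POD_NAME holds a single name only while exactly one Pod exists. When moving from MicroK8s to a standard K8s cluster, drop the "sudo microk8s" prefix.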
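The prefix note above can be captured in a small wrapper. A minimal sketch under stated assumptions: the helper names `kctl`, `pod_name`, and `pod_api_url` are hypothetical, `microk8s` is assumed to run without `sudo`, and `kubectl proxy` is assumed to listen on its default port 8001 as in the output above. Unlike the go-template, which prints the name of every Pod in the namespace (and therefore several lines once a Deployment is scaled up), `pod_name` filters by the `app` label and prints only the first match.

```shell
# Hypothetical helpers; assume microk8s runs without sudo (or as root).

# Use MicroK8s' bundled kubectl if present, otherwise a standalone kubectl.
kctl() {
  if command -v microk8s >/dev/null 2>&1; then
    microk8s kubectl "$@"
  else
    kubectl "$@"
  fi
}

# Print the name of the first Pod labelled app=<name>.
pod_name() {
  kctl get pods -l "app=$1" -o jsonpath='{.items[0].metadata.name}'
}

# Build the API URL of a Pod as served by `kubectl proxy` on port 8001.
# Usage: pod_api_url <pod-name> [namespace]   (namespace defaults to default)
pod_api_url() {
  printf 'http://localhost:8001/api/v1/namespaces/%s/pods/%s/\n' "${2:-default}" "$1"
}
```

For example, with `kubectl proxy` running, `export POD_NAME=$(pod_name kubernetes-bootcamp)` followed by `curl "$(pod_api_url "$POD_NAME")"` requests the same Pod object that is shown next.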

Proxy access result (http://localhost:8001/api/v1/namespaces/default/pods/$POD_NAME/)

{

"kind": "Pod",

"apiVersion": "v1",

"metadata": {

"name": "kubernetes-bootcamp-75c5d958ff-j4fvb",

"generateName": "kubernetes-bootcamp-75c5d958ff-",

"namespace": "default",

"uid": "b93a52dc-5d7b-4e18-8b0a-fa8664a404fc",

"resourceVersion": "151213",

"creationTimestamp": "2022-12-06T16:18:42Z",

"labels": {

"app": "kubernetes-bootcamp",

"pod-template-hash": "75c5d958ff"

},

"annotations": {

"cni.projectcalico.org/containerID": "f1ceaae80cb4bc1398c34f7f4501b95dedb60d8985a0de5a0e11bfff353e1249",

"cni.projectcalico.org/podIP": "10.1.128.212/32",

"cni.projectcalico.org/podIPs": "10.1.128.212/32"

},

"ownerReferences": [

{

"apiVersion": "apps/v1",

"kind": "ReplicaSet",

"name": "kubernetes-bootcamp-75c5d958ff",

"uid": "3e0d948b-ff27-40ce-822c-98529d1708d7",

"controller": true,

"blockOwnerDeletion": true

}

],

"managedFields": [

{

"manager": "Go-http-client",

"operation": "Update",

"apiVersion": "v1",

"time": "2022-12-06T16:18:42Z",

"fieldsType": "FieldsV1",

"fieldsV1": {

"f:metadata": {

"f:annotations": {

".": {},

"f:cni.projectcalico.org/containerID": {},

"f:cni.projectcalico.org/podIP": {},

"f:cni.projectcalico.org/podIPs": {}

}

}

},

"subresource": "status"

},

{

"manager": "kubelite",

"operation": "Update",

"apiVersion": "v1",

"time": "2022-12-06T16:18:42Z",

"fieldsType": "FieldsV1",

"fieldsV1": {

"f:metadata": {

"f:generateName": {},

"f:labels": {

".": {},

"f:app": {},

"f:pod-template-hash": {}

},

"f:ownerReferences": {

".": {},

"k:{\"uid\":\"3e0d948b-ff27-40ce-822c-98529d1708d7\"}": {}

}

},

"f:spec": {

"f:containers": {

"k:{\"name\":\"kubernetes-bootcamp\"}": {

".": {},

"f:image": {},

"f:imagePullPolicy": {},

"f:name": {},

"f:resources": {},

"f:terminationMessagePath": {},

"f:terminationMessagePolicy": {}

}

},

"f:dnsPolicy": {},

"f:enableServiceLinks": {},

"f:restartPolicy": {},

"f:schedulerName": {},

"f:securityContext": {},

"f:terminationGracePeriodSeconds": {}

}

}

},

{

"manager": "kubelite",

"operation": "Update",

"apiVersion": "v1",

"time": "2022-12-06T16:18:58Z",

"fieldsType": "FieldsV1",

"fieldsV1": {

"f:status": {

"f:conditions": {

"k:{\"type\":\"ContainersReady\"}": {

".": {},

"f:lastProbeTime": {},

"f:lastTransitionTime": {},

"f:status": {},

"f:type": {}

},

"k:{\"type\":\"Initialized\"}": {

".": {},

"f:lastProbeTime": {},

"f:lastTransitionTime": {},

"f:status": {},

"f:type": {}

},

"k:{\"type\":\"Ready\"}": {

".": {},

"f:lastProbeTime": {},

"f:lastTransitionTime": {},

"f:status": {},

"f:type": {}

}

},

"f:containerStatuses": {},

"f:hostIP": {},

"f:phase": {},

"f:podIP": {},

"f:podIPs": {

".": {},

"k:{\"ip\":\"10.1.128.212\"}": {

".": {},

"f:ip": {}

}

},

"f:startTime": {}

}

},

"subresource": "status"

}

]

},

"spec": {

"volumes": [

{

"name": "kube-api-access-8ctqs",

"projected": {

"sources": [

{

"serviceAccountToken": {

"expirationSeconds": 3607,

"path": "token"

}

},

{

"configMap": {

"name": "kube-root-ca.crt",

"items": [

{

"key": "ca.crt",

"path": "ca.crt"

}

]

}

},

{

"downwardAPI": {

"items": [

{

"path": "namespace",

"fieldRef": {

"apiVersion": "v1",

"fieldPath": "metadata.namespace"

}

}

]

}

}

],

"defaultMode": 420

}

}

],

"containers": [

{

"name": "kubernetes-bootcamp",

"image": "gcr.io/google-samples/kubernetes-bootcamp:v1",

"resources": {},

"volumeMounts": [

{

"name": "kube-api-access-8ctqs",

"readOnly": true,

"mountPath": "/var/run/secrets/kubernetes.io/serviceaccount"

}

],

"terminationMessagePath": "/dev/termination-log",

"terminationMessagePolicy": "File",

"imagePullPolicy": "IfNotPresent"

}

],

"restartPolicy": "Always",

"terminationGracePeriodSeconds": 30,

"dnsPolicy": "ClusterFirst",

"serviceAccountName": "default",

"serviceAccount": "default",

"nodeName": "microk8s",

"securityContext": {},

"schedulerName": "default-scheduler",

"tolerations": [

{

"key": "node.kubernetes.io/not-ready",

"operator": "Exists",

"effect": "NoExecute",

"tolerationSeconds": 300

},

{

"key": "node.kubernetes.io/unreachable",

"operator": "Exists",

"effect": "NoExecute",

"tolerationSeconds": 300

}

],

"priority": 0,

"enableServiceLinks": true,

"preemptionPolicy": "PreemptLowerPriority"

},

"status": {

"phase": "Running",

"conditions": [

{

"type": "Initialized",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2022-12-06T16:18:42Z"

},

{

"type": "Ready",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2022-12-06T16:18:58Z"

},

{

"type": "ContainersReady",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2022-12-06T16:18:58Z"

},

{

"type": "PodScheduled",

"status": "True",

"lastProbeTime": null,

"lastTransitionTime": "2022-12-06T16:18:42Z"

}

],

"hostIP": "10.178.0.5",

"podIP": "10.1.128.212",

"podIPs": [

{

"ip": "10.1.128.212"

}

],

"startTime": "2022-12-06T16:18:42Z",

"containerStatuses": [

{

"name": "kubernetes-bootcamp",

"state": {

"running": {

"startedAt": "2022-12-06T16:18:58Z"

}

},

"lastState": {},

"ready": true,

"restartCount": 0,

"image": "gcr.io/google-samples/kubernetes-bootcamp:v1",

"imageID": "gcr.io/google-samples/kubernetes-bootcamp@sha256:0d6b8ee63bb57c5f5b6156f446b3bc3b3c143d233037f3a2f00e279c8fcc64af",

"containerID": "containerd://9c8a0824de0cb9f9e1b671925837dac2fd2be882537c6193ef926b025e6de745",

"started": true

}

],

"qosClass": "BestEffort"

}

}

Creating a Kubernetes Service
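The steps in this section (exposing a Deployment as a NodePort Service and then looking up the port it received) can be condensed into a helper. A minimal sketch: `expose_nodeport` is a hypothetical name, `microk8s` is assumed to run without `sudo`, and the go-template reads the first port entry of the Service, which is where Kubernetes records the assigned `nodePort`.

```shell
# Hypothetical helper: expose a Deployment as a NodePort Service and print
# the node port that Kubernetes assigned to it.
# Usage: expose_nodeport <deployment-name> [port]   (port defaults to 8080)
expose_nodeport() {
  deploy="$1"
  port="${2:-8080}"
  microk8s kubectl expose "deployment/$deploy" --type=NodePort --port "$port" >/dev/null || return 1
  # `expose` names the Service after the Deployment by default.
  microk8s kubectl get "services/$deploy" -o go-template='{{(index .spec.ports 0).nodePort}}'
}
```

The app should then answer at `http://<node-IP>:<node-port>`. In this walkthrough the node address is 10.178.0.5 (from `kubectl describe pods`) and the Service details below show node port 32078.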

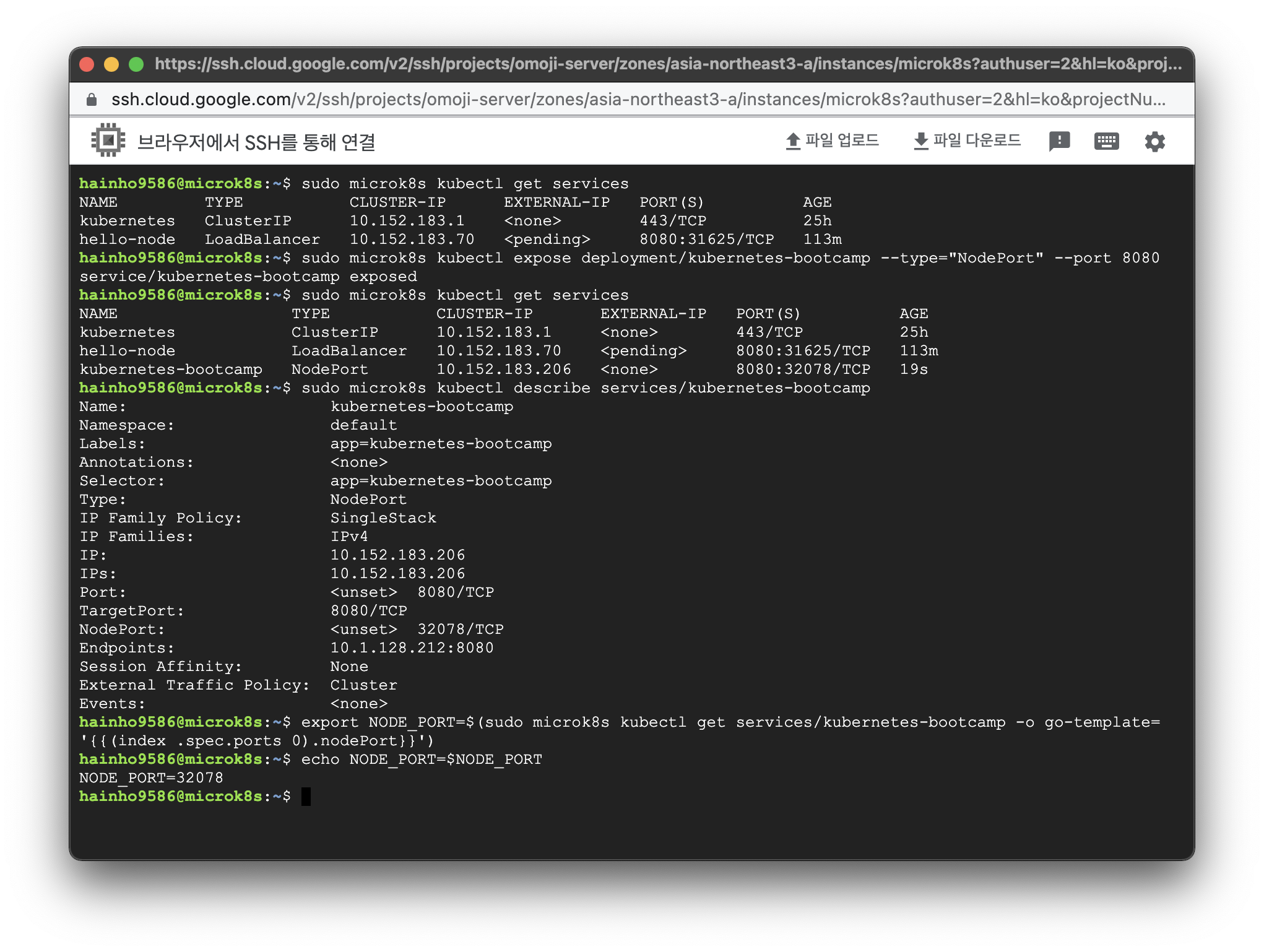

Service list command

$ sudo microk8s kubectl get services

Service creation command

$ sudo microk8s kubectl expose deployment/<deployment-name> --type="<type>" --port <port>

Service creation output

$ sudo microk8s kubectl expose deployment/kubernetes-bootcamp --type="NodePort" --port 8080

service/kubernetes-bootcamp exposed

Service list output (before the Service was created)

$ sudo microk8s kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 25h

hello-node LoadBalancer 10.152.183.70 <pending> 8080:31625/TCP 113m

Service list command

$ sudo microk8s kubectl get services

Service list output

$ sudo microk8s kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 25h

hello-node LoadBalancer 10.152.183.70 <pending> 8080:31625/TCP 113m

kubernetes-bootcamp NodePort 10.152.183.206 <none> 8080:32078/TCP 19s

Service details command

$ sudo microk8s kubectl describe services/<service-name>

Service details output

$ sudo microk8s kubectl describe services/kubernetes-bootcamp

Name: kubernetes-bootcamp

Namespace: default

Labels: app=kubernetes-bootcamp

Annotations: <none>

Selector: app=kubernetes-bootcamp

Type: NodePort

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.152.183.206

IPs: 10.152.183.206

Port: <unset> 8080/TCP

TargetPort: 8080/TCP

NodePort: <unset> 32078/TCP

Endpoints: 10.1.128.212:8080

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

Deleting a Kubernetes Service

Service deletion command

$ sudo microk8s kubectl delete service -l app=kubernetes-bootcamp

Service deletion output

$ sudo microk8s kubectl delete service -l app=kubernetes-bootcamp

service "kubernetes-bootcamp" deleted

Service list command

$ sudo microk8s kubectl get services

Service list output

$ sudo microk8s kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 25h

hello-node LoadBalancer 10.152.183.70 <pending> 8080:31625/TCP 128m

Kubernetes Deployment details

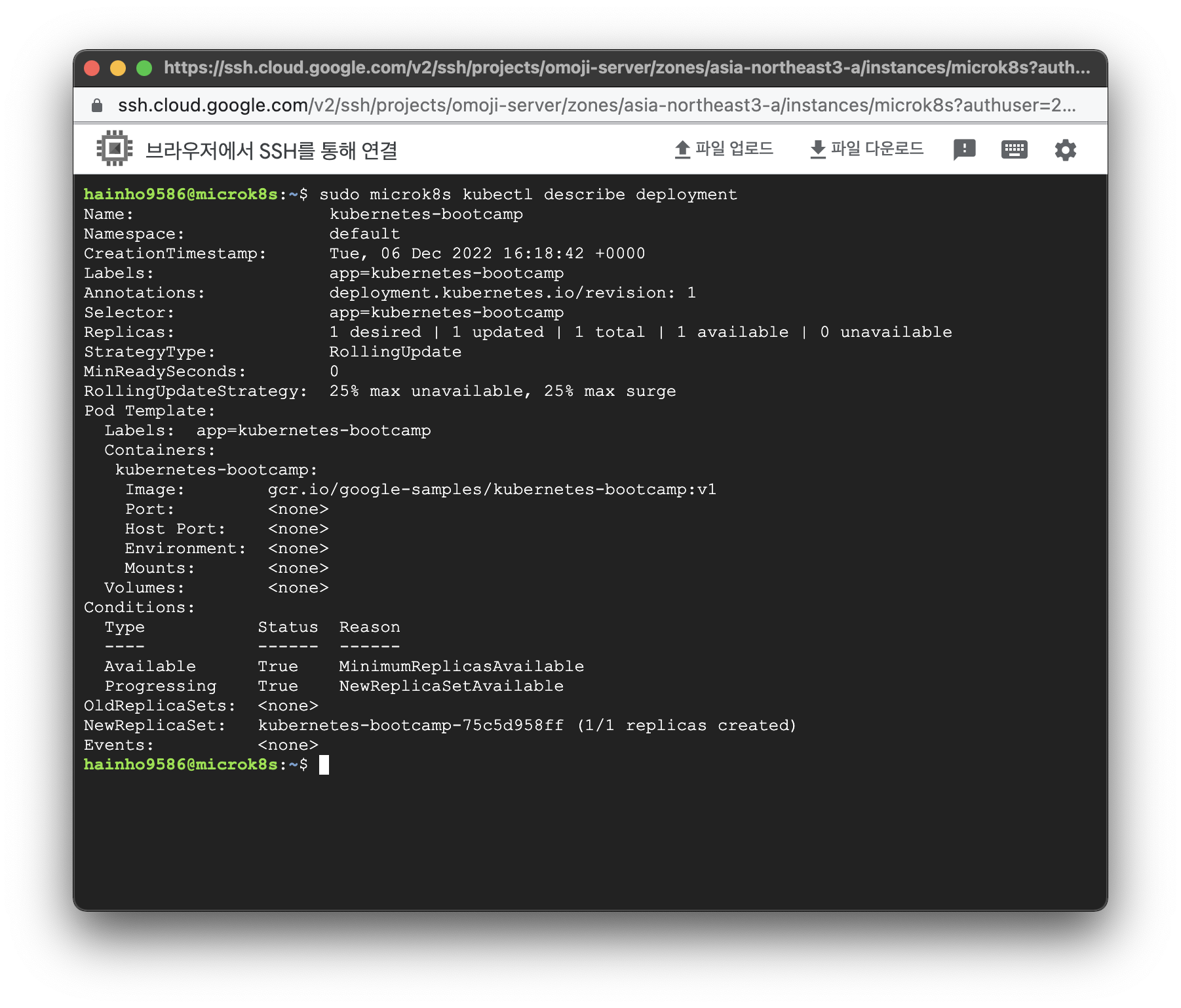

Deployment details command

$ sudo microk8s kubectl describe deployment

Deployment details output

$ sudo microk8s kubectl describe deployment

Name: kubernetes-bootcamp

Namespace: default

CreationTimestamp: Tue, 06 Dec 2022 16:18:42 +0000

Labels: app=kubernetes-bootcamp

Annotations: deployment.kubernetes.io/revision: 1

Selector: app=kubernetes-bootcamp

Replicas: 1 desired | 1 updated | 1 total | 1 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=kubernetes-bootcamp

Containers:

kubernetes-bootcamp:

Image: gcr.io/google-samples/kubernetes-bootcamp:v1

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: kubernetes-bootcamp-75c5d958ff (1/1 replicas created)

Events: <none>

Using Kubernetes labels

Command to list Pods with a given label

$ sudo microk8s kubectl get pods -l app=kubernetes-bootcamp

Output

$ sudo microk8s kubectl get pods -l app=kubernetes-bootcamp

NAME READY STATUS RESTARTS AGE

kubernetes-bootcamp-75c5d958ff-j4fvb 1/1 Running 0 73m

Setting the Pod name

$ export POD_NAME=$(sudo microk8s kubectl get pods -o go-template --template '{{range.items}}{{.metadata.name}}{{"\n"}}{{end}}')

Printing the Pod name

$ echo Name of the Pod: $POD_NAME

Name of the Pod: kubernetes-bootcamp-75c5d958ff-j4fvb

Pod label command

$ sudo microk8s kubectl label pods <pod-name> version=<version>

Pod label output

$ sudo microk8s kubectl label pods $POD_NAME version=v1

pod/kubernetes-bootcamp-75c5d958ff-j4fvb labeled

Pod details command

$ sudo microk8s kubectl describe pods <pod-name>

Pod details output

$ sudo microk8s kubectl describe pods $POD_NAME

Name: kubernetes-bootcamp-75c5d958ff-j4fvb

Namespace: default

Priority: 0

Service Account: default

Node: microk8s/10.178.0.5

Start Time: Tue, 06 Dec 2022 16:18:42 +0000

Labels: app=kubernetes-bootcamp

pod-template-hash=75c5d958ff

version=v1

Annotations: cni.projectcalico.org/containerID: f1ceaae80cb4bc1398c34f7f4501b95dedb60d8985a0de5a0e11bfff353e1249

cni.projectcalico.org/podIP: 10.1.128.212/32

cni.projectcalico.org/podIPs: 10.1.128.212/32

Status: Running

IP: 10.1.128.212

IPs:

IP: 10.1.128.212

Controlled By: ReplicaSet/kubernetes-bootcamp-75c5d958ff

Containers:

kubernetes-bootcamp:

Container ID: containerd://9c8a0824de0cb9f9e1b671925837dac2fd2be882537c6193ef926b025e6de745

Image: gcr.io/google-samples/kubernetes-bootcamp:v1

Image ID: gcr.io/google-samples/kubernetes-bootcamp@sha256:0d6b8ee63bb57c5f5b6156f446b3bc3b3c143d233037f3a2f00e279c8fcc64af

Port: <none>

Host Port: <none>

State: Running

Started: Tue, 06 Dec 2022 16:18:58 +0000

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-8ctqs (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-8ctqs:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events: <none>

Code to list Pods with a specific version label

$ sudo microk8s kubectl get pods -l version=v1

Result of listing Pods by version label

$ sudo microk8s kubectl get pods -l version=v1

NAME READY STATUS RESTARTS AGE

kubernetes-bootcamp-75c5d958ff-j4fvb 1/1 Running 0 75m

Kubernetes Scale Up

Code to list Deployments

$ sudo microk8s kubectl get deployments

Result of listing Deployments

$ sudo microk8s kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

kubernetes-bootcamp 1/1 1 1 91m

Code to list ReplicaSets

$ sudo microk8s kubectl get replicasets

Result of listing ReplicaSets

$ sudo microk8s kubectl get replicasets

NAME DESIRED CURRENT READY AGE

kubernetes-bootcamp-75c5d958ff 1 1 1 91m

Scale up code

$ sudo microk8s kubectl scale deployments/<deployment-name> --replicas=<count>

Here the number of replicas is increased to 4.

Scale up result

$ sudo microk8s kubectl scale deployments/kubernetes-bootcamp --replicas=4

deployment.apps/kubernetes-bootcamp scaled

Code to show additional Pod information (IP, node)

$ sudo microk8s kubectl get pods -o wide

Result of showing additional Pod information

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kubernetes-bootcamp-75c5d958ff-j4fvb 1/1 Running 0 92m 10.1.128.212 microk8s <none> <none>

kubernetes-bootcamp-75c5d958ff-pftb8 1/1 Running 0 10s 10.1.128.215 microk8s <none> <none>

kubernetes-bootcamp-75c5d958ff-2kngn 1/1 Running 0 10s 10.1.128.213 microk8s <none> <none>

kubernetes-bootcamp-75c5d958ff-r2lvl 1/1 Running 0 10s 10.1.128.214 microk8s <none> <none>

Code to show detailed Deployment information

$ sudo microk8s kubectl describe deployments/<deployment-name>

Result of showing detailed Deployment information

$ sudo microk8s kubectl describe deployments/kubernetes-bootcamp

Name: kubernetes-bootcamp

Namespace: default

CreationTimestamp: Tue, 06 Dec 2022 16:18:42 +0000

Labels: app=kubernetes-bootcamp

Annotations: deployment.kubernetes.io/revision: 1

Selector: app=kubernetes-bootcamp

Replicas: 4 desired | 4 updated | 4 total | 4 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=kubernetes-bootcamp

Containers:

kubernetes-bootcamp:

Image: gcr.io/google-samples/kubernetes-bootcamp:v1

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Progressing True NewReplicaSetAvailable

Available True MinimumReplicasAvailable

OldReplicaSets: <none>

NewReplicaSet: kubernetes-bootcamp-75c5d958ff (4/4 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 23s deployment-controller Scaled up replica set kubernetes-bootcamp-75c5d958ff to 4 from 1쿠버네티스 Scale Down

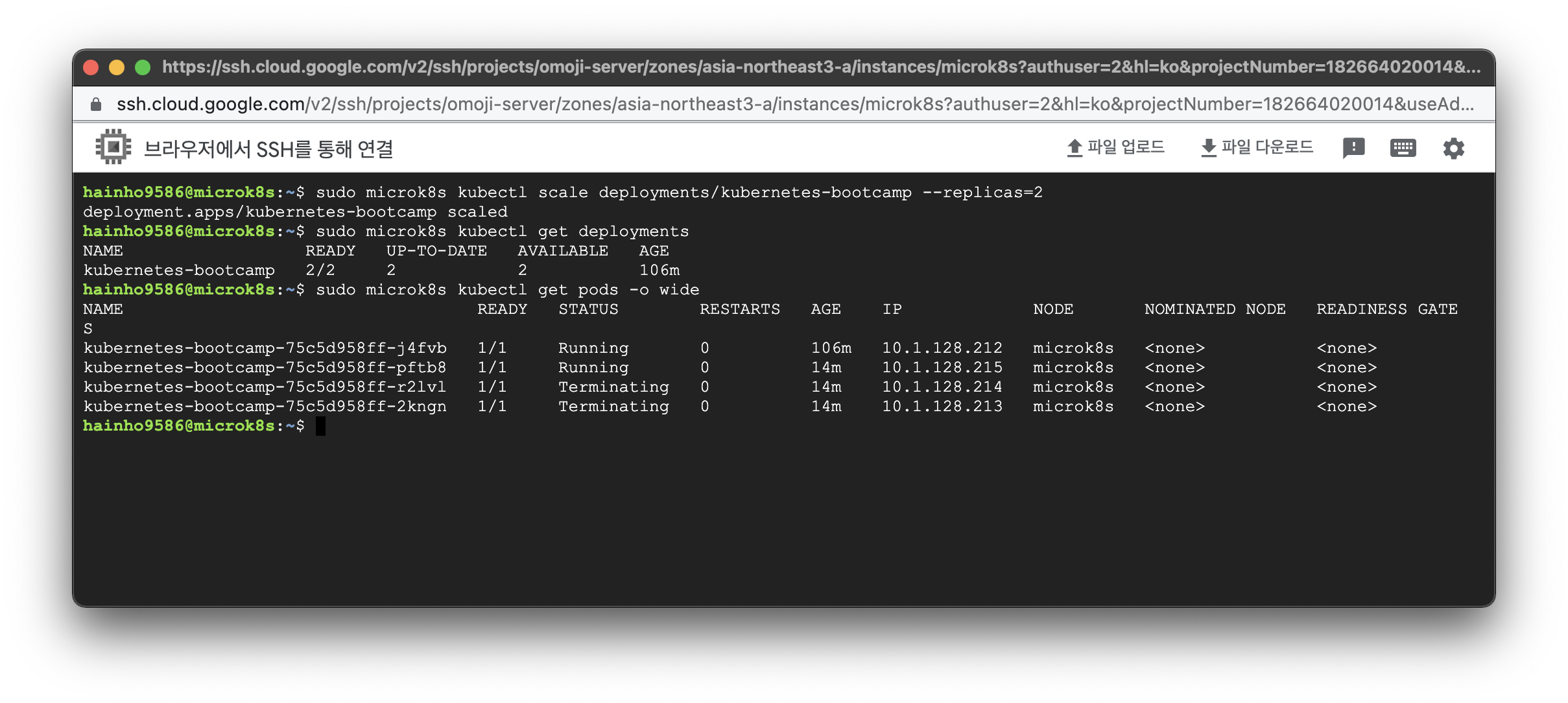

Scale Down 코드

$ sudo microk8s kubectl scale deployments/Pod명 --replicas=개수Scale Down 코드 결과

$ sudo microk8s kubectl scale deployments/kubernetes-bootcamp --replicas=2

deployment.apps/kubernetes-bootcamp scaled

Code to list Deployments

$ sudo microk8s kubectl get deployments

Result of listing Deployments

$ sudo microk8s kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

kubernetes-bootcamp 2/2 2 2 106m

Code to show additional Pod information

$ sudo microk8s kubectl get pods -o wide

Result of showing additional Pod information

$ sudo microk8s kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kubernetes-bootcamp-75c5d958ff-j4fvb 1/1 Running 0 106m 10.1.128.212 microk8s <none> <none>

kubernetes-bootcamp-75c5d958ff-pftb8 1/1 Running 0 14m 10.1.128.215 microk8s <none> <none>

kubernetes-bootcamp-75c5d958ff-r2lvl 1/1 Terminating 0 14m 10.1.128.214 microk8s <none> <none>

kubernetes-bootcamp-75c5d958ff-2kngn 1/1 Terminating 0 14m 10.1.128.213 microk8s <none> <none>

Queried right after the scale-down, before the surplus Pods had finished terminating

$ sudo microk8s kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kubernetes-bootcamp-75c5d958ff-j4fvb 1/1 Running 0 113m 10.1.128.212 microk8s <none> <none>

kubernetes-bootcamp-75c5d958ff-pftb8 1/1 Running 0 21m 10.1.128.215 microk8s <none> <none>

Queried after the surplus Pods had finished terminating
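The two listings above differ only in how many Pods are still Terminating. You can check this without reading the whole table. The sketch below summarises the STATUS column of `kubectl get pods` output read from stdin. The function name `count_pods_by_status` is hypothetical. The sketch relies on STATUS being the third column in both the default and `-o wide` formats.

```shell
#!/usr/bin/env bash
# Summarise the STATUS column (3rd field) of `kubectl get pods` output.
# The header line is skipped; results are printed as "STATUS COUNT", sorted.
count_pods_by_status() {
  awk 'NR > 1 { count[$3]++ } END { for (s in count) print s, count[s] }' | sort
}
```

Usage: `sudo microk8s kubectl get pods -o wide | count_pods_by_status`. For the first listing above, this would print `Running 2` and `Terminating 2`. For the second, it would print only `Running 2`.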

Kubernetes Rolling Update

Code to set a new Docker image

$ sudo microk8s kubectl set image deployments/kubernetes-bootcamp kubernetes-bootcamp=jocatalin/kubernetes-bootcamp:v2

Result of setting the Docker image

$ sudo microk8s kubectl set image deployments/kubernetes-bootcamp kubernetes-bootcamp=jocatalin/kubernetes-bootcamp:v2

deployment.apps/kubernetes-bootcamp image updated

Code to check the rollout status

$ sudo microk8s kubectl rollout status deployments/kubernetes-bootcamp

Rollout status result

$ sudo microk8s kubectl rollout status deployments/kubernetes-bootcamp

deployment "kubernetes-bootcamp" successfully rolled out

Code to set an image tag that does not exist (v10), to try out a rollback

$ sudo microk8s kubectl set image deployments/kubernetes-bootcamp kubernetes-bootcamp=gcr.io/google-samples/kubernetes-bootcamp:v10

Result of setting the v10 image

$ sudo microk8s kubectl set image deployments/kubernetes-bootcamp kubernetes-bootcamp=gcr.io/google-samples/kubernetes-bootcamp:v10

deployment.apps/kubernetes-bootcamp image updated

Code to undo the rollout

$ sudo microk8s kubectl rollout undo deployments/kubernetes-bootcamp

Rollout undo result

$ sudo microk8s kubectl rollout undo deployments/kubernetes-bootcamp

deployment.apps/kubernetes-bootcamp rolled backYaml을 사용하여 배포하기

배포해볼 yaml 파일 코드

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-deployment

labels:

app: nginx

spec:

replicas: 3

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:1.14.2

ports:

- containerPort: 80Yaml 배포 코드

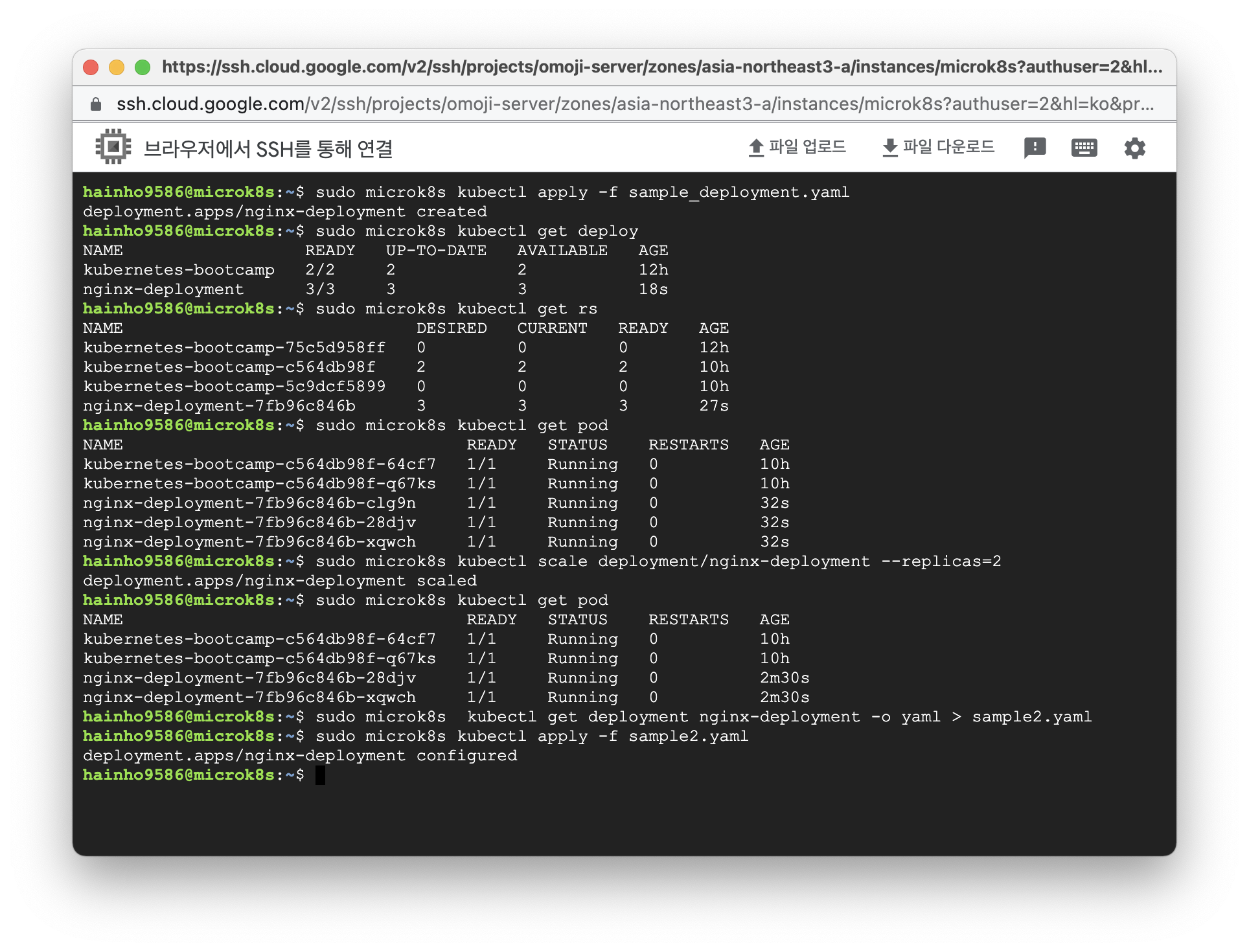

$ sudo microk8s kubectl apply -f Yaml파일명Yaml 배포 결과

$ sudo microk8s kubectl apply -f sample_deployment.yaml

deployment.apps/nginx-deployment created

Code to list Deployments

$ sudo microk8s kubectl get deploy

Result of listing Deployments

$ sudo microk8s kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

kubernetes-bootcamp 2/2 2 2 12h

nginx-deployment 3/3 3 3 15s

Code to list ReplicaSets

$ sudo microk8s kubectl get rs

Result of listing ReplicaSets

$ sudo microk8s kubectl get rs

NAME DESIRED CURRENT READY AGE

kubernetes-bootcamp-75c5d958ff 0 0 0 12h

kubernetes-bootcamp-c564db98f 2 2 2 10h

kubernetes-bootcamp-5c9dcf5899 0 0 0 10h

nginx-deployment-7fb96c846b 3 3 3 23s

Code to list Pods

$ sudo microk8s kubectl get pod

Result of listing Pods

$ sudo microk8s kubectl get pod

NAME READY STATUS RESTARTS AGE

kubernetes-bootcamp-c564db98f-64cf7 1/1 Running 0 10h

kubernetes-bootcamp-c564db98f-q67ks 1/1 Running 0 10h

nginx-deployment-7fb96c846b-6cv7f 1/1 Running 0 31s

nginx-deployment-7fb96c846b-6xczm 1/1 Running 0 31s

nginx-deployment-7fb96c846b-qc55d 1/1 Running 0 31s

nginx-deployment currently runs 3 replicas.
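In the ReplicaSet list above, the kubernetes-bootcamp ReplicaSets with 0 desired replicas are older revisions left over from the earlier set image and rollout undo steps. Kubernetes keeps them, up to `revisionHistoryLimit` (10 by default), so that a Deployment can be rolled back. The sketch below inspects that history and rolls back to an explicit revision instead of only the previous one. The wrapper function names are hypothetical. `rollout history` and `rollout undo --to-revision` are standard kubectl subcommands and flags.

```shell
#!/usr/bin/env bash
# Wrapper around the MicroK8s kubectl used in this post; redefine it to
# point at another kubectl if needed.
kctl() { sudo microk8s kubectl "$@"; }

# List the recorded revisions of a Deployment.
show_history() {
  kctl rollout history "deployment/$1"
}

# Roll a Deployment back to a specific revision number (a bare
# `rollout undo` only goes back one revision), then wait for it to settle.
rollback_to() {
  local deployment="$1" revision="$2"
  kctl rollout undo "deployment/${deployment}" --to-revision="${revision}"
  kctl rollout status "deployment/${deployment}"
}
```

Usage: run `show_history kubernetes-bootcamp` to see the revision numbers. Then run `rollback_to kubernetes-bootcamp <revision>` with one of them.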

Code to scale the replica count to 2

$ sudo microk8s kubectl scale deployment/<deployment-name> --replicas=<count>

Result of scaling the replica count to 2

$ sudo microk8s kubectl scale deployment/nginx-deployment --replicas=2

deployment.apps/nginx-deployment scaled

Exporting the live spec, including the new change, to YAML

$ sudo microk8s kubectl get deployment nginx-deployment -o yaml > sample.yaml

Checking the generated files

$ ls

sample.yaml sample_deployment.yaml

- sample.yaml: the file that reflects the new spec

- sample_deployment.yaml: the file that holds the original spec
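Before applying sample.yaml, `kubectl diff` can preview what would change on the live object. kubectl documents the exit status of `diff` as 0 for no differences, 1 when differences were found, and greater than 1 on error. The sketch below turns that exit status into a short message. The function name `preview_changes` is hypothetical.

```shell
#!/usr/bin/env bash
kctl() { sudo microk8s kubectl "$@"; }

# Show the diff between a manifest and the live objects, then interpret
# kubectl diff's exit status: 0 = no changes, 1 = changes, >1 = error.
preview_changes() {
  local manifest="$1" rc=0
  kctl diff -f "$manifest" || rc=$?
  case "$rc" in
    0) echo "no changes" ;;
    1) echo "changes detected" ;;
    *) echo "kubectl diff failed (exit $rc)" >&2; return "$rc" ;;
  esac
}
```

Usage: `preview_changes sample.yaml`. If the output looks right, run `sudo microk8s kubectl apply -f sample.yaml`.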

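Code to update from the YAML file

$ sudo microk8s kubectl apply -f sample.yaml

kubectl create expects the YAML file to describe the resource in full and returns an error if the resource already exists.

kubectl apply works even when the resource already exists and updates only the fields that changed. It also creates the resource if it does not exist yet.

In REST API terms, create corresponds to POST and apply to PATCH.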
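The difference shows up when you submit the same manifest twice. The sketch below assumes the Deployment from sample_deployment.yaml already exists. In that case `create` is expected to fail because the resource exists, while `apply` succeeds. The function name `compare_create_apply` is hypothetical.

```shell
#!/usr/bin/env bash
kctl() { sudo microk8s kubectl "$@"; }

# Submit the same manifest with `create` and then with `apply`,
# reporting which of the two calls succeeded.
compare_create_apply() {
  local manifest="$1"
  if kctl create -f "$manifest"; then
    echo "create: ok"
  else
    echo "create: failed (the resource probably exists already)"
  fi
  if kctl apply -f "$manifest"; then
    echo "apply: ok"
  else
    echo "apply: failed"
  fi
}
```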

Result of updating from the YAML file

$ sudo microk8s kubectl apply -f sample.yaml

deployment.apps/nginx-deployment configured

YAML file reflecting the new spec

$ cat sample.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

annotations:

deployment.kubernetes.io/revision: "1"

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"labels":{"app":"nginx"},"name":"nginx-deployment","namespace":"default"},"spec":{"replicas":3,"selector":{"matchLabels":{"app":"nginx"}},"template":{"metadata":{"labels":{"app":"nginx"}},"spec":{"containers":[{"image":"nginx:1.14.2","name":"nginx","ports":[{"containerPort":80}]}]}}}}

creationTimestamp: "2022-12-07T05:03:26Z"

generation: 2

labels:

app: nginx

name: nginx-deployment

namespace: default

resourceVersion: "229329"

uid: f53c36e4-9524-4f37-a870-1faf0b221a49

spec:

progressDeadlineSeconds: 600

replicas: 2

revisionHistoryLimit: 10

selector:

matchLabels:

app: nginx

strategy:

rollingUpdate:

maxSurge: 25%

maxUnavailable: 25%

type: RollingUpdate

template:

metadata:

creationTimestamp: null

labels:

app: nginx

spec:

containers:

- image: nginx:1.14.2

imagePullPolicy: IfNotPresent

name: nginx

ports:

- containerPort: 80

protocol: TCP

resources: {}

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

terminationGracePeriodSeconds: 30

status:

availableReplicas: 2

conditions:

- lastTransitionTime: "2022-12-07T05:03:29Z"

lastUpdateTime: "2022-12-07T05:03:29Z"

message: Deployment has minimum availability.

reason: MinimumReplicasAvailable

status: "True"

type: Available

- lastTransitionTime: "2022-12-07T05:03:26Z"

lastUpdateTime: "2022-12-07T05:03:29Z"

message: ReplicaSet "nginx-deployment-7fb96c846b" has successfully progressed.

reason: NewReplicaSetAvailable

status: "True"

type: Progressing

observedGeneration: 2

readyReplicas: 2

replicas: 2

updatedReplicas: 2

- The contents of sample.yaml, printed with cat.

- You can see that replicas changed from 3 to 2.
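The strategy block in sample.yaml sets `maxSurge: 25%` and `maxUnavailable: 25%`. According to the Kubernetes Deployment documentation, a maxSurge percentage is converted to a Pod count by rounding up, and a maxUnavailable percentage by rounding down. The sketch below reproduces that arithmetic. The function name is hypothetical. For the 2 replicas above, it shows that at most 3 Pods exist during an update and at least 2 stay available.

```shell
#!/usr/bin/env bash
# Convert percentage-based maxSurge / maxUnavailable into Pod counts.
# maxSurge rounds up, maxUnavailable rounds down (integer arithmetic only).
rolling_update_bounds() {
  local replicas="$1" surge_pct="$2" unavailable_pct="$3"
  local surge=$(( (replicas * surge_pct + 99) / 100 ))      # ceiling
  local unavailable=$(( replicas * unavailable_pct / 100 )) # floor
  echo "max_pods=$(( replicas + surge )) min_available=$(( replicas - unavailable ))"
}
```

Usage: `rolling_update_bounds 2 25 25` prints `max_pods=3 min_available=2`. For the 4-replica kubernetes-bootcamp Deployment earlier, `rolling_update_bounds 4 25 25` prints `max_pods=5 min_available=3`.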

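Volume, Docker Volume, and Kubernetes Volume setup

Volume (host folder): switching to the root account

$ sudo su

Volume (host folder): listing the current folder

# ls

sample sample.yaml sample_deployment.yaml snap test.txt

Volume (host folder): code to mount a host folder into a container folder and open a shell in the container

# sudo docker run -it -v /home/hainho9586/sample:/data <docker-image> /bin/bash

Volume (host folder): result of mounting the folder and opening a shell in the container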

# sudo docker run -it -v /home/hainho9586/sample:/data centos /bin/bash

Unable to find image 'centos:latest' locally

latest: Pulling from library/centos

a1d0c7532777: Pull complete

Digest: sha256:a27fd8080b517143cbbbab9dfb7c8571c40d67d534bbdee55bd6c473f432b177

Status: Downloaded newer image for centos:latest

Volume (host folder): listing the files inside the container

# ls -al

total 60

drwxr-xr-x 1 root root 4096 Dec 7 08:39 .

drwxr-xr-x 1 root root 4096 Dec 7 08:39 ..

-rwxr-xr-x 1 root root 0 Dec 7 08:39 .dockerenv

lrwxrwxrwx 1 root root 7 Nov 3 2020 bin -> usr/bin

drwxrwxr-x 2 systemd-coredump 1002 4096 Dec 7 08:30 data

drwxr-xr-x 5 root root 360 Dec 7 08:39 dev

drwxr-xr-x 1 root root 4096 Dec 7 08:39 etc

drwxr-xr-x 2 root root 4096 Nov 3 2020 home

lrwxrwxrwx 1 root root 7 Nov 3 2020 lib -> usr/lib

lrwxrwxrwx 1 root root 9 Nov 3 2020 lib64 -> usr/lib64

drwx------ 2 root root 4096 Sep 15 2021 lost+found

drwxr-xr-x 2 root root 4096 Nov 3 2020 media

drwxr-xr-x 2 root root 4096 Nov 3 2020 mnt

drwxr-xr-x 2 root root 4096 Nov 3 2020 opt

dr-xr-xr-x 231 root root 0 Dec 7 08:39 proc

dr-xr-x--- 2 root root 4096 Sep 15 2021 root

drwxr-xr-x 11 root root 4096 Sep 15 2021 run

lrwxrwxrwx 1 root root 8 Nov 3 2020 sbin -> usr/sbin

drwxr-xr-x 2 root root 4096 Nov 3 2020 srv

dr-xr-xr-x 13 root root 0 Dec 7 08:39 sys

drwxrwxrwt 7 root root 4096 Sep 15 2021 tmp

drwxr-xr-x 12 root root 4096 Sep 15 2021 usr

drwxr-xr-x 20 root root 4096 Sep 15 2021 var

Volume (host folder): checking the mounted data folder and its file

# ls data/

test.txt

# cat data/test.txt

Hello world!

Docker Volume

Docker Volume: code to create a volume

$ sudo docker volume create --name <volume-name>

Docker Volume: result of creating the volume

$ sudo docker volume create --name hello

hello

Docker Volume: code to inspect the volume

$ sudo docker volume inspect <volume-name>

Docker Volume: result of inspecting the volume

$ sudo docker volume inspect hello

[

{

"CreatedAt": "2022-12-07T10:06:49Z",

"Driver": "local",

"Labels": {},

"Mountpoint": "/var/snap/docker/common/var-lib-docker/volumes/hello/_data",

"Name": "hello",

"Options": {},

"Scope": "local"

}

]

Docker Volume: listing volumes

$ sudo docker volume ls

DRIVER VOLUME NAME

local hello

Docker Volume: code to start a container with the volume mounted and open a shell in it

$ sudo docker run -it -v <volume-name>:/<path-in-container> <docker-image> /bin/bash

Docker Volume: result of starting the container with the volume mounted

$ sudo docker run -it -v hello:/world ubuntu /bin/bash

Unable to find image 'ubuntu:latest' locally

latest: Pulling from library/ubuntu

e96e057aae67: Pull complete

Digest: sha256:4b1d0c4a2d2aaf63b37111f34eb9fa89fa1bf53dd6e4ca954d47caebca4005c2

Status: Downloaded newer image for ubuntu:latest

Docker Volume: checking the folders inside the container

# ls

bin boot dev etc home lib lib32 lib64 libx32 media mnt opt proc root run sbin srv sys tmp usr var world

# ls world/

Docker Volume: code to remove the volume

$ sudo docker volume rm <volume-name>

Docker Volume: result of removing the volume

$ sudo docker volume rm hello

hello

Kubernetes Volume

Kubernetes Volume: creating a YAML file that runs two containers

apiVersion: v1
kind: Pod
metadata:
  name: two-containers
spec:
  restartPolicy: Never
  volumes:
  - name: shared-data
    emptyDir: {}
  containers:
  - name: nginx-container
    image: nginx
    volumeMounts:
    - name: shared-data
      mountPath: /usr/share/nginx/html
  - name: debian-container
    image: debian
    volumeMounts:
    - name: shared-data
      mountPath: /pod-data
    command: ["/bin/sh"]
    args: ["-c", "echo Hello from the debian container > /pod-data/index.html"]

- File name: two-container-pod.yaml

- You could write this file locally, but here the sample from the official Kubernetes website is used.

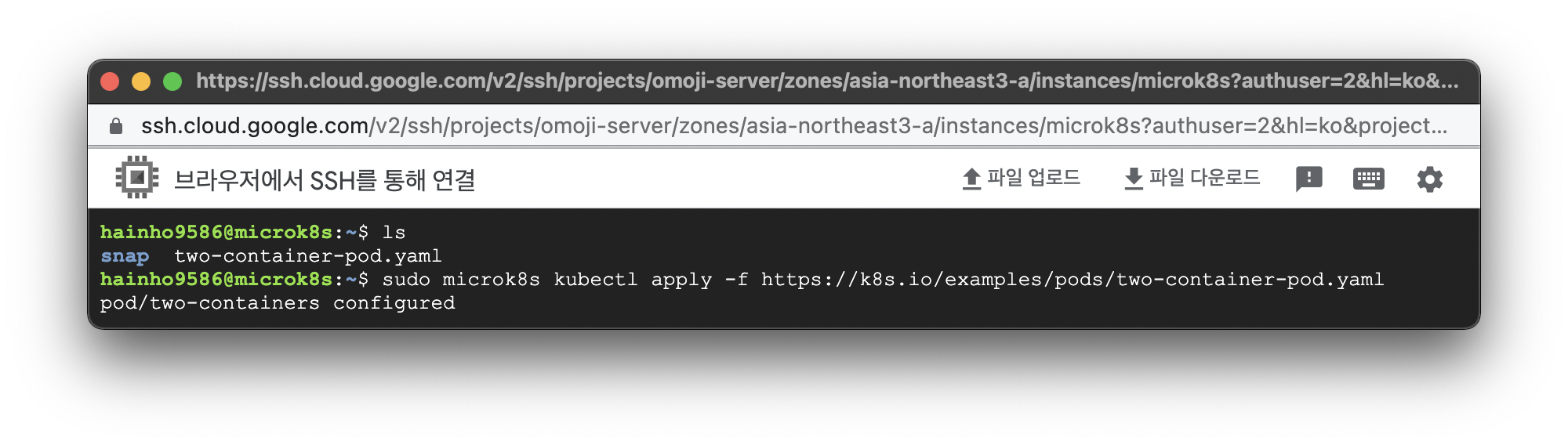

Kubernetes Volume: code to run the containers from the YAML file

$ sudo microk8s kubectl apply -f https://k8s.io/examples/pods/two-container-pod.yaml

Kubernetes Volume: result of running the containers from the YAML file

$ sudo microk8s kubectl apply -f https://k8s.io/examples/pods/two-container-pod.yaml

pod/two-containers configured

Kubernetes Volume: code to check the Pod and container information

$ sudo microk8s kubectl get pod <pod-name> --output=<format>

Kubernetes Volume: result of checking the Pod and container information

$ sudo microk8s kubectl get pod two-containers --output=yaml

apiVersion: v1

kind: Pod

metadata:

annotations:

cni.projectcalico.org/containerID: 634492887c1d7535ce43848b7b8cea064a47949e1ae14dd0b4d8e56298d31384

cni.projectcalico.org/podIP: 10.1.128.235/32

cni.projectcalico.org/podIPs: 10.1.128.235/32

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"name":"two-containers","namespace":"default"},"spec":{"containers":[{"image":"nginx","name":"nginx-container","volumeMounts":[{"mountPath":"/usr/share/nginx/html","name":"shared-data"}]},{"args":["-c","echo Hello from the debian container \u003e /pod-data/index.html"],"command":["/bin/sh"],"image":"debian","name":"debian-container","volumeMounts":[{"mountPath":"/pod-data","name":"shared-data"}]}],"restartPolicy":"Never","volumes":[{"emptyDir":{},"name":"shared-data"}]}}

creationTimestamp: "2022-12-07T11:09:13Z"

name: two-containers

namespace: default

resourceVersion: "266569"

uid: 423f7b2a-9e15-4982-9e1c-55b8e1998c54

spec:

containers:

- image: nginx

imagePullPolicy: Always

name: nginx-container

resources: {}

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /usr/share/nginx/html

name: shared-data

- mountPath: /var/run/secrets/kubernetes.io/serviceaccount

name: kube-api-access-hvt8k

readOnly: true

- args:

- -c

- echo Hello from the debian container > /pod-data/index.html

command:

- /bin/sh

image: debian

imagePullPolicy: Always

name: debian-container

resources: {}

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /pod-data

name: shared-data

- mountPath: /var/run/secrets/kubernetes.io/serviceaccount

name: kube-api-access-hvt8k

readOnly: true

dnsPolicy: ClusterFirst

enableServiceLinks: true

nodeName: microk8s

preemptionPolicy: PreemptLowerPriority

priority: 0

restartPolicy: Never

schedulerName: default-scheduler

securityContext: {}

serviceAccount: default

serviceAccountName: default

terminationGracePeriodSeconds: 30

tolerations:

- effect: NoExecute

key: node.kubernetes.io/not-ready

operator: Exists

tolerationSeconds: 300

- effect: NoExecute

key: node.kubernetes.io/unreachable

operator: Exists

tolerationSeconds: 300

volumes:

- emptyDir: {}

name: shared-data

- name: kube-api-access-hvt8k

projected:

defaultMode: 420

sources:

- serviceAccountToken:

expirationSeconds: 3607

path: token

- configMap:

items:

- key: ca.crt

path: ca.crt

name: kube-root-ca.crt

- downwardAPI:

items:

- fieldRef:

apiVersion: v1

fieldPath: metadata.namespace

path: namespace

status:

conditions:

- lastProbeTime: null

lastTransitionTime: "2022-12-07T11:09:14Z"

status: "True"

type: Initialized

- lastProbeTime: null

lastTransitionTime: "2022-12-07T11:09:28Z"

message: 'containers with unready status: [debian-container]'

reason: ContainersNotReady

status: "False"

type: Ready

- lastProbeTime: null

lastTransitionTime: "2022-12-07T11:09:28Z"

message: 'containers with unready status: [debian-container]'

reason: ContainersNotReady

status: "False"

type: ContainersReady

- lastProbeTime: null

lastTransitionTime: "2022-12-07T11:09:13Z"

status: "True"

type: PodScheduled

containerStatuses:

- containerID: containerd://b21a4c6971638064dd07d6256713dc4a00802f0e3966ec62defb7bbfcd478bab

image: docker.io/library/debian:latest

imageID: docker.io/library/debian@sha256:a288aa7ad0e4d443e86843972c25a02f99e9ad6ee589dd764895b2c3f5a8340b

lastState: {}

name: debian-container

ready: false

restartCount: 0

started: false

state:

terminated:

containerID: containerd://b21a4c6971638064dd07d6256713dc4a00802f0e3966ec62defb7bbfcd478bab

exitCode: 0

finishedAt: "2022-12-07T11:09:26Z"

reason: Completed

startedAt: "2022-12-07T11:09:26Z"

- containerID: containerd://1e78f0624e627054caacdd2ad9c55cc8e8d71285042f9ab4f40b10ee4ae85dd2

image: docker.io/library/nginx:latest

imageID: docker.io/library/nginx@sha256:ab589a3c466e347b1c0573be23356676df90cd7ce2dbf6ec332a5f0a8b5e59db

lastState: {}

name: nginx-container

ready: true

restartCount: 0

started: true

state:

running:

startedAt: "2022-12-07T11:09:18Z"

hostIP: 10.178.0.5

phase: Running

podIP: 10.1.128.235

podIPs:

- ip: 10.1.128.235

qosClass: BestEffort

startTime: "2022-12-07T11:09:14Z"

Kubernetes Volume: code to open a shell in a specific container of a Pod

$ sudo microk8s kubectl exec -it <pod-name> -c <container-name> -- /bin/bash

Kubernetes Volume: result of opening a shell in the container

$ sudo microk8s kubectl exec -it two-containers -c nginx-container -- /bin/bash

root@two-containers:/#

This opens a shell in the nginx container.

Kubernetes Volume: code to check that the nginx web server is running

$ sudo microk8s kubectl exec -it two-containers -c nginx-container -- /bin/bash

root@two-containers:/# apt-get update

root@two-containers:/# apt-get install curl procps

root@two-containers:/# ps aux

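root@two-containers:/# curl localhost

- Install curl (to send HTTP requests) and procps (which provides ps).

- Use ps aux to confirm that the nginx master and worker processes are running.

- Send an HTTP GET request with curl localhost.

- Check the response to confirm that nginx is serving correctly.

Kubernetes Volume: result of checking that the nginx web server is running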

root@two-containers:/# apt-get update

Get:1 http://deb.debian.org/debian bullseye InRelease [116 kB]

Get:2 http://deb.debian.org/debian-security bullseye-security InRelease [48.4 kB]

Get:3 http://deb.debian.org/debian bullseye-updates InRelease [44.1 kB]

Get:4 http://deb.debian.org/debian bullseye/main amd64 Packages [8184 kB]

Get:5 http://deb.debian.org/debian-security bullseye-security/main amd64 Packages [209 kB]

Get:6 http://deb.debian.org/debian bullseye-updates/main amd64 Packages [14.6 kB]

Fetched 8615 kB in 2s (4001 kB/s)

Reading package lists... Done

root@two-containers:/# apt-get install curl procps

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

curl is already the newest version (7.74.0-1.3+deb11u3).

The following additional packages will be installed:

libgpm2 libncurses6 libncursesw6 libprocps8 psmisc

Suggested packages:

gpm

The following NEW packages will be installed:

libgpm2 libncurses6 libncursesw6 libprocps8 procps psmisc

0 upgraded, 6 newly installed, 0 to remove and 0 not upgraded.

Need to get 1034 kB of archives.

After this operation, 3474 kB of additional disk space will be used.

Do you want to continue? [Y/n] Y

Get:1 http://deb.debian.org/debian bullseye/main amd64 libncurses6 amd64 6.2+20201114-2 [102 kB]

Get:2 http://deb.debian.org/debian bullseye/main amd64 libncursesw6 amd64 6.2+20201114-2 [132 kB]

Get:3 http://deb.debian.org/debian bullseye/main amd64 libprocps8 amd64 2:3.3.17-5 [63.9 kB]

Get:4 http://deb.debian.org/debian bullseye/main amd64 procps amd64 2:3.3.17-5 [502 kB]

Get:5 http://deb.debian.org/debian bullseye/main amd64 libgpm2 amd64 1.20.7-8 [35.6 kB]

Get:6 http://deb.debian.org/debian bullseye/main amd64 psmisc amd64 23.4-2 [198 kB]

Fetched 1034 kB in 0s (3526 kB/s)

debconf: delaying package configuration, since apt-utils is not installed

Selecting previously unselected package libncurses6:amd64.

(Reading database ... 7823 files and directories currently installed.)

Preparing to unpack .../0-libncurses6_6.2+20201114-2_amd64.deb ...

Unpacking libncurses6:amd64 (6.2+20201114-2) ...

Selecting previously unselected package libncursesw6:amd64.

Preparing to unpack .../1-libncursesw6_6.2+20201114-2_amd64.deb ...

Unpacking libncursesw6:amd64 (6.2+20201114-2) ...

Selecting previously unselected package libprocps8:amd64.

Preparing to unpack .../2-libprocps8_2%3a3.3.17-5_amd64.deb ...

Unpacking libprocps8:amd64 (2:3.3.17-5) ...

Selecting previously unselected package procps.

Preparing to unpack .../3-procps_2%3a3.3.17-5_amd64.deb ...

Unpacking procps (2:3.3.17-5) ...

Selecting previously unselected package libgpm2:amd64.

Preparing to unpack .../4-libgpm2_1.20.7-8_amd64.deb ...

Unpacking libgpm2:amd64 (1.20.7-8) ...

Selecting previously unselected package psmisc.

Preparing to unpack .../5-psmisc_23.4-2_amd64.deb ...

Unpacking psmisc (23.4-2) ...

Setting up libgpm2:amd64 (1.20.7-8) ...

Setting up psmisc (23.4-2) ...

Setting up libncurses6:amd64 (6.2+20201114-2) ...

Setting up libncursesw6:amd64 (6.2+20201114-2) ...

Setting up libprocps8:amd64 (2:3.3.17-5) ...

Setting up procps (2:3.3.17-5) ...

Processing triggers for libc-bin (2.31-13+deb11u5) ...

root@two-containers:/# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 0.0 0.1 8932 6096 ? Ss 11:09 0:00 nginx: master process nginx -g daemon off;

nginx 28 0.0 0.0 9320 2500 ? S 11:09 0:00 nginx: worker process

nginx 29 0.0 0.0 9320 2500 ? S 11:09 0:00 nginx: worker process

root 30 0.0 0.0 4164 3412 pts/0 Ss 11:41 0:00 /bin/bash

root 372 0.0 0.0 6760 2940 pts/0 R+ 11:46 0:00 ps aux

root@two-containers:/# curl localhost

Hello from the debian container

- The final HTTP GET request returns "Hello from the debian container", the text the debian container wrote to the shared volume.
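You can run the same check without opening a shell or installing packages. Read the file that the debian container wrote into the shared emptyDir volume directly from the nginx container, using a non-interactive `kubectl exec` (no `-it`). This is a minimal sketch: the function name is hypothetical, and the Pod, container, path, and expected text are taken from this example.

```shell
#!/usr/bin/env bash
kctl() { sudo microk8s kubectl "$@"; }

# Read index.html through the nginx container's mount of the shared volume
# and compare it with the text the debian container was told to write.
check_shared_volume() {
  local expected="Hello from the debian container"
  local actual
  actual="$(kctl exec two-containers -c nginx-container -- \
    cat /usr/share/nginx/html/index.html)" || return 2
  if [ "$actual" = "$expected" ]; then
    echo "shared volume OK"
  else
    echo "unexpected content: $actual" >&2
    return 1
  fi
}
```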

Helm Chart

Enabling Helm in MicroK8s

$ sudo microk8s enable helm

- In MicroK8s, Helm becomes available once the helm addon is enabled.

MicroK8s: code to create a Helm chart

$ sudo microk8s helm create <chart-name>

MicroK8s: result of creating the Helm chart

$ sudo microk8s helm create mychart

Creating mychart

MicroK8s Helm: code to show the chart's file structure

$ ls <chart-name>

Chart.yaml charts templates values.yaml

MicroK8s Helm: result of showing the chart's file structure

$ ls mychart/

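Chart.yaml charts templates values.yaml

- Chart.yaml: holds general information about the chart, such as its name and version.

- charts/: empty at first; used to include other charts in this chart.

- templates/: holds the resource templates that are rendered when the chart is deployed to Kubernetes.

- values.yaml: holds the configuration values that users can set.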
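Before running `helm install`, it can help to check that a chart directory still has the entries `helm create` scaffolded. The sketch below reports which of the four entries listed above exist. It returns non-zero only when Chart.yaml, the file that carries the chart's name and version, is missing. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Report which of the entries generated by `helm create` exist in a chart
# directory; fail if Chart.yaml itself is missing.
check_chart_layout() {
  local dir="$1" entry
  for entry in Chart.yaml values.yaml templates charts; do
    if [ -e "$dir/$entry" ]; then
      echo "found:   $entry"
    else
      echo "missing: $entry"
    fi
  done
  [ -f "$dir/Chart.yaml" ]
}
```

Usage: `check_chart_layout mychart/`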

MicroK8s Helm: templates/service.yaml file

$ cat mychart/templates/service.yaml

apiVersion: v1
kind: Service
metadata:
  name: {{ include "mychart.fullname" . }}
  labels:
    {{- include "mychart.labels" . | nindent 4 }}
spec:
  type: {{ .Values.service.type }}
  ports:
    - port: {{ .Values.service.port }}
      targetPort: http
      protocol: TCP
      name: http
  selector:
    {{- include "mychart.selectorLabels" . | nindent 4 }}

- .Values.service.type and .Values.service.port are read from service.type and service.port in the values.yaml shown below.

MicroK8s Helm: values.yaml file

replicaCount: 1

image:
  repository: nginx
  pullPolicy: IfNotPresent
  tag: ""

imagePullSecrets: []
nameOverride: ""
fullnameOverride: ""

serviceAccount:
  create: true
  annotations: {}
  name: ""

podAnnotations: {}

podSecurityContext: {}

securityContext: {}

service:
  type: ClusterIP
  port: 80

ingress:
  enabled: false
  className: ""
  annotations: {}
  hosts:
    - host: chart-example.local
      paths:
        - path: /
          pathType: ImplementationSpecific
  tls: []

resources: {}

autoscaling:
  enabled: false
  minReplicas: 1
  maxReplicas: 100
  targetCPUUtilizationPercentage: 80

nodeSelector: {}

tolerations: []

affinity: {}

- values.yaml is the file where users set their own values.

- It uses nginx as the image and holds the replica count, the service type, the port, and so on.

service:
  type: LoadBalancer
  port: 8888

- For testing, the service type and port are changed as above.
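Instead of editing values.yaml, Helm can also override individual values on the command line with `--set`, or from an extra file with `-f`/`--values`. Values given this way take precedence over the chart's values.yaml. The sketch below renders the chart locally with `helm template`, applying the same two overrides while leaving values.yaml untouched. The wrapper function name is hypothetical.

```shell
#!/usr/bin/env bash
helm_cmd() { sudo microk8s helm "$@"; }

# Render a chart with service.type / service.port overridden on the
# command line; nothing is installed and values.yaml is not modified.
render_with_service() {
  local chart="$1" type="$2" port="$3"
  helm_cmd template "$chart" \
    --set "service.type=${type}" \
    --set "service.port=${port}"
}
```

Usage: `render_with_service mychart/ LoadBalancer 8888`. The same `--set` flags also work with `helm install` and `helm upgrade`.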

MicroK8s Helm chart install code

$ sudo microk8s helm install <release-name> <chart-path>

MicroK8s Helm chart install result

$ sudo microk8s helm install foo mychart/

NAME: foo

LAST DEPLOYED: Sun Dec 11 17:09:06 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status of by running 'kubectl get --namespace default svc -w foo-mychart'

export SERVICE_IP=$(kubectl get svc --namespace default foo-mychart --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")

echo http://$SERVICE_IP:8888

MicroK8s: code to list Services

$ sudo microk8s kubectl get svc

MicroK8s: result of listing Services

$ sudo microk8s kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 6d1h

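foo-mychart LoadBalancer 10.152.183.143 <pending> 8888:31152/TCP 2m24s

EXTERNAL-IP stays <pending> here, most likely because no load-balancer implementation (for example the MicroK8s metallb addon) is enabled in this cluster.

MicroK8s: code to list Helm releases

$ sudo microk8s helm list

MicroK8s: result of listing Helm releases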

$ sudo microk8s helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

foo default 1 2022-12-11 17:09:06.745866988 +0000 UTC deployed mychart-0.1.0 1.16.0

MicroK8s: code to list Helm releases in a specific namespace

$ sudo microk8s helm list -n <namespace>

MicroK8s: result of listing Helm releases in a specific namespace

$ sudo microk8s helm list -n kube-system

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

MicroK8s: code to render a Helm chart

$ sudo microk8s helm template <chart-path>

MicroK8s: result of rendering the Helm chart

$ sudo microk8s helm template mychart/

---

# Source: mychart/templates/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: release-name-mychart

labels:

helm.sh/chart: mychart-0.1.0

app.kubernetes.io/name: mychart

app.kubernetes.io/instance: release-name

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

---

# Source: mychart/templates/service.yaml

apiVersion: v1

kind: Service

metadata:

name: release-name-mychart

labels:

helm.sh/chart: mychart-0.1.0

app.kubernetes.io/name: mychart

app.kubernetes.io/instance: release-name

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

spec:

type: LoadBalancer

ports:

- port: 8888

targetPort: http

protocol: TCP

name: http

selector:

app.kubernetes.io/name: mychart

app.kubernetes.io/instance: release-name

---

# Source: mychart/templates/deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: release-name-mychart

labels:

helm.sh/chart: mychart-0.1.0

app.kubernetes.io/name: mychart

app.kubernetes.io/instance: release-name

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

spec:

replicas: 1

selector:

matchLabels:

app.kubernetes.io/name: mychart

app.kubernetes.io/instance: release-name

template:

metadata:

labels:

app.kubernetes.io/name: mychart

app.kubernetes.io/instance: release-name

spec:

serviceAccountName: release-name-mychart

securityContext:

{}

containers:

- name: mychart

securityContext:

{}

image: "nginx:1.16.0"

imagePullPolicy: IfNotPresent

ports:

- name: http

containerPort: 80

protocol: TCP

livenessProbe:

httpGet:

path: /

port: http

readinessProbe:

httpGet:

path: /

port: http

resources:

{}

---

# Source: mychart/templates/tests/test-connection.yaml

apiVersion: v1

kind: Pod

metadata:

name: "release-name-mychart-test-connection"

labels:

helm.sh/chart: mychart-0.1.0

app.kubernetes.io/name: mychart

app.kubernetes.io/instance: release-name

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

annotations:

"helm.sh/hook": test

spec:

containers:

- name: wget

image: busybox

command: ['wget']

args: ['release-name-mychart:8888']

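restartPolicy: Never

- This command renders the templates in templates/ with the values from values.yaml and prints the combined YAML without installing anything. Because no release name was given, release-name is used as a placeholder.

- The rendered YAML can be saved to a file, for example: $ sudo microk8s helm template foo ./mychart > output.yaml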
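Because the rendered output is plain YAML, you can quickly check whether a value override took effect. The sketch below prints the `type:` of the first Service in the rendered stream. It matches lines with awk rather than using a real YAML parser. It therefore assumes Helm's usual layout: documents separated by `---`, `kind:` at column 0, and an indented `type:` under `spec`. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Print spec.type of the first Service in `helm template` output (stdin).
# Line-based matching only: '---' starts a new document, and an indented
# "type:" line is taken as the Service type once "kind: Service" was seen.
rendered_service_type() {
  awk '
    /^---/             { in_service = 0 }
    /^kind: Service$/  { in_service = 1 }
    in_service && /^ +type:/ { print $2; exit }
  '
}
```

Usage: with the values above, `sudo microk8s helm template mychart/ | rendered_service_type` should print LoadBalancer.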

MicroK8s Helm upgrade code

$ sudo microk8s helm upgrade <release-name> <chart-path>

- To check the change, service.type in values.yaml was first changed from LoadBalancer to NodePort.

MicroK8s Helm upgrade result

$ sudo microk8s helm upgrade foo mychart/

Release "foo" has been upgraded. Happy Helming!

NAME: foo

LAST DEPLOYED: Sun Dec 11 17:28:51 2022

NAMESPACE: default

STATUS: deployed

REVISION: 2

NOTES:

1. Get the application URL by running these commands:

export NODE_PORT=$(kubectl get --namespace default -o jsonpath="{.spec.ports[0].nodePort}" services foo-mychart)

export NODE_IP=$(kubectl get nodes --namespace default -o jsonpath="{.items[0].status.addresses[0].address}")

echo http://$NODE_IP:$NODE_PORT

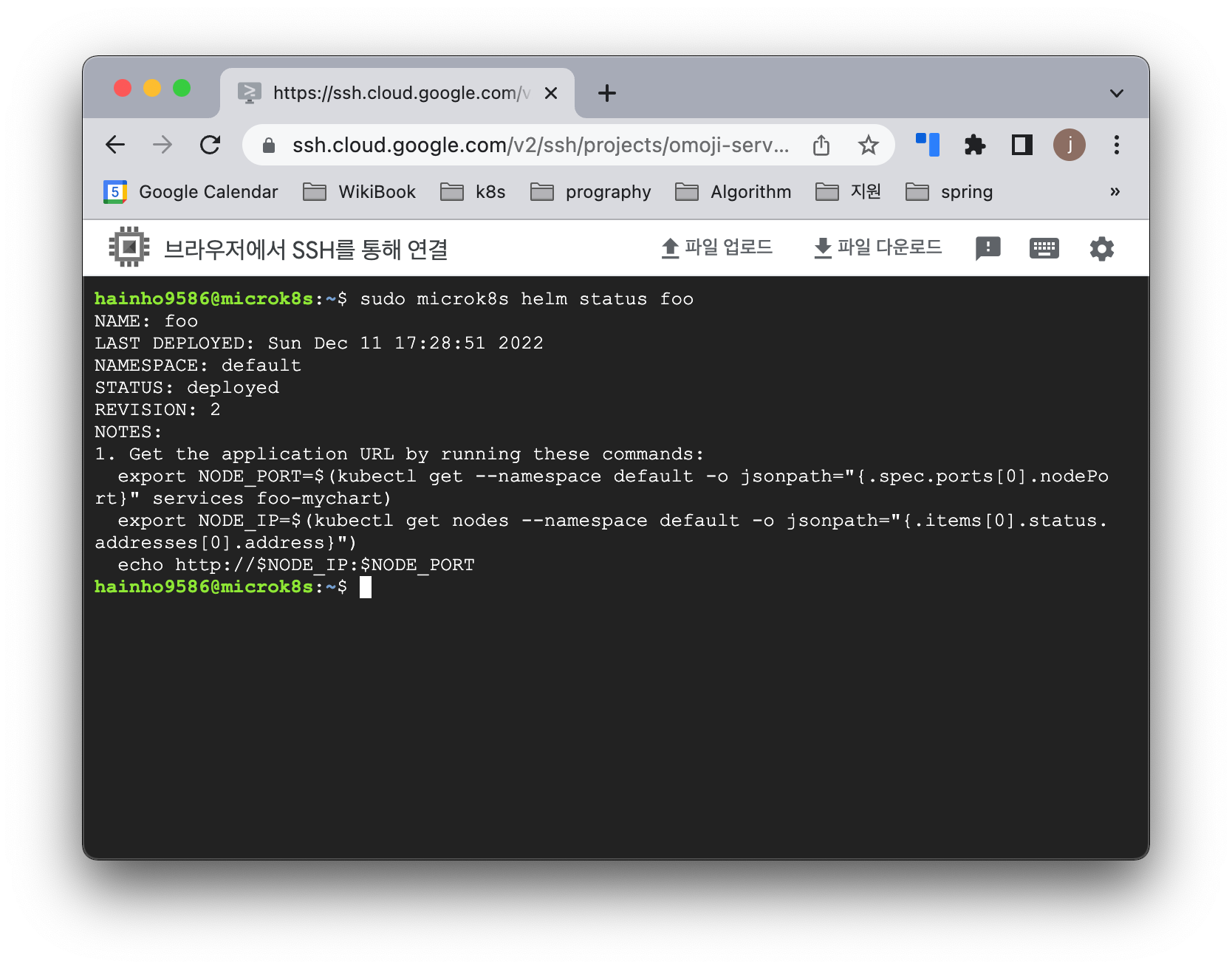
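Each `helm upgrade` creates a new release revision (REVISION: 2 above). If an upgrade misbehaves, `helm history` lists the revisions and `helm rollback` returns the release to an earlier one. The rollback is itself recorded as a new revision. The wrapper function names below are hypothetical.

```shell
#!/usr/bin/env bash
helm_cmd() { sudo microk8s helm "$@"; }

# Show every revision recorded for a release.
release_history() {
  helm_cmd history "$1"
}

# Roll a release back to the given revision number.
release_rollback() {
  local release="$1" revision="$2"
  helm_cmd rollback "$release" "$revision"
}
```

Usage: `release_rollback foo 1` would bring the Service back to the LoadBalancer type used in revision 1.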

MicroK8s: code to check a Helm release's status

$ sudo microk8s helm status <release-name>

MicroK8s: result of checking the Helm release's status

$ sudo microk8s helm status foo

NAME: foo

LAST DEPLOYED: Sun Dec 11 17:28:51 2022

NAMESPACE: default

STATUS: deployed

REVISION: 2

NOTES:

1. Get the application URL by running these commands:

export NODE_PORT=$(kubectl get --namespace default -o jsonpath="{.spec.ports[0].nodePort}" services foo-mychart)

export NODE_IP=$(kubectl get nodes --namespace default -o jsonpath="{.items[0].status.addresses[0].address}")

echo http://$NODE_IP:$NODE_PORT

MicroK8s: code to remove a Helm release (helm delete is an alias of helm uninstall)

$ sudo microk8s helm delete <release-name>

MicroK8s: result of removing the Helm release

$ sudo microk8s helm delete foo

release "foo" uninstalledHelm Chart Repository

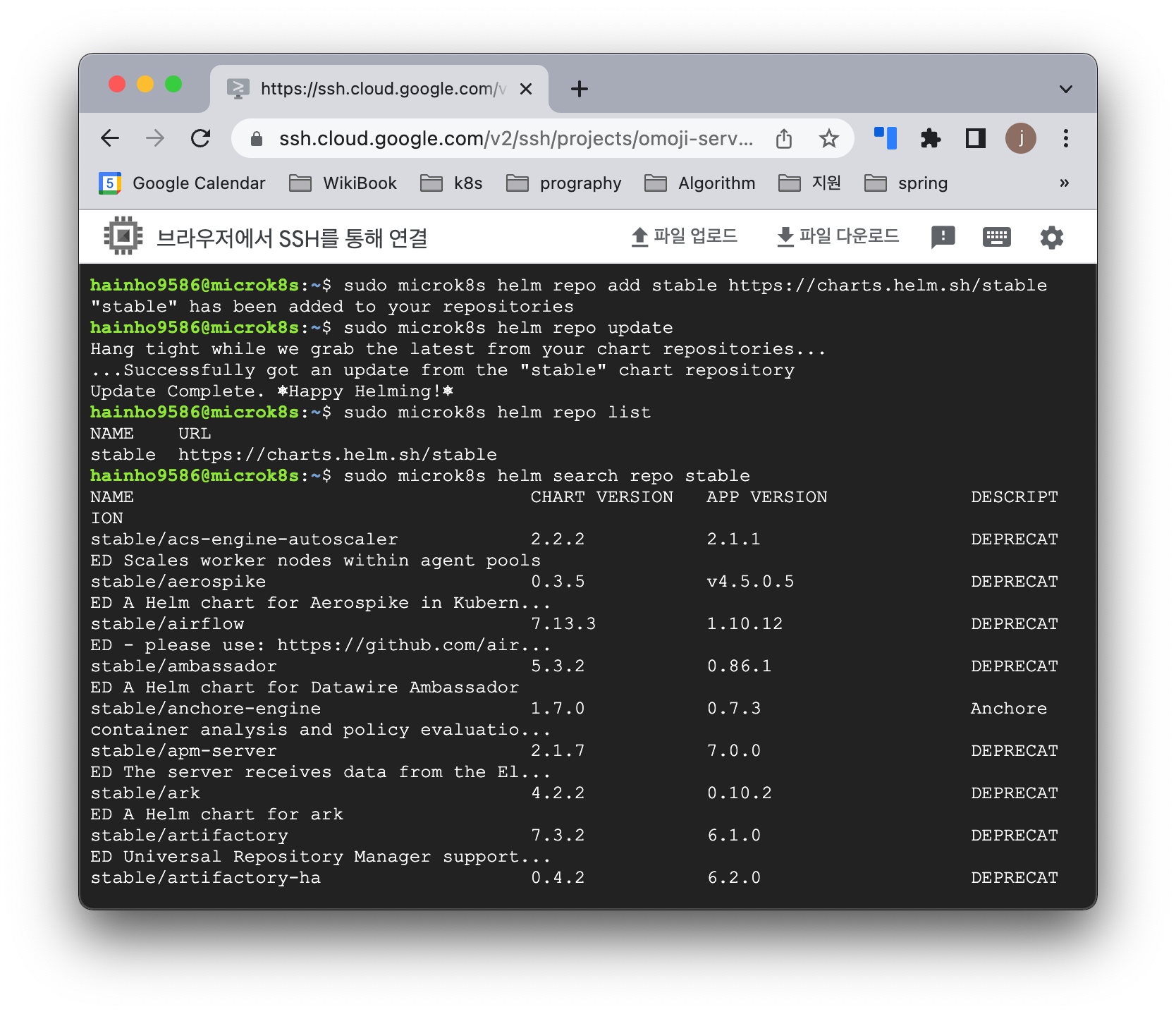

Helm Chart Repository 추가 코드

$ sudo microk8s helm repo add 차트명 차트링크- Docker Hub 처럼 Helm Chart도 Repository를 갖고있다.

Helm Chart Repository 추가 결과

$ sudo microk8s helm repo add stable https://charts.helm.sh/stable

"stable" has been added to your repositories

Refreshing the chart lists of the repositories

$ sudo microk8s helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈Happy Helming!⎈

- Fetches the latest chart lists from the added repositories.

Listing the added chart repositories

$ sudo microk8s helm repo list

NAME URL

stable https://charts.helm.sh/stable

- Shows the list of added chart repositories.

Code to search the repositories for a chart

$ sudo microk8s helm search repo <keyword>

- Searches the charts stored in the added repositories (here, stable).
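After searching, a typical next step is to pick a chart and version and install it as a named release. Pinning the chart version makes later installs reproducible. `helm search repo --versions` and `helm install --version` are standard flags; the function names are hypothetical. Note that the stable repository added above is an archive of the old official charts and is no longer updated, so a maintained repository is the better choice for real deployments.

```shell
#!/usr/bin/env bash
helm_cmd() { sudo microk8s helm "$@"; }

# List every available version of a chart from the added repositories.
chart_versions() {
  helm_cmd search repo "$1" --versions
}

# Refresh the repository indexes, then install a chart as a named release
# pinned to an explicit chart version.
install_pinned() {
  local release="$1" chart="$2" version="$3"
  helm_cmd repo update
  helm_cmd install "$release" "$chart" --version "$version"
}
```

Usage: `chart_versions stable/<chart>` shows the available versions. Then run `install_pinned <release-name> stable/<chart> <version>` with one of them.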

Errors

Error example

$ sudo microk8s kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-node-697897c86-fk6dx 1/1 Running 0 21h

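mydeployment 0/1 ImagePullBackOff 0 2m15s

The mydeployment Pod has the status ImagePullBackOff.

This means the kubelet could not pull the container image and is waiting before it retries. Common causes are an image name or tag that does not exist, a registry that requires credentials, or a registry that cannot be reached.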
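ImagePullBackOff, and the ErrImagePull status that usually precedes it, appear in the STATUS column. You can pick the failing Pods out of `kubectl get pods` output, then run `kubectl describe pod <name>` to see the actual pull error in the Events section. The sketch below lists those Pods from output read on stdin. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Print the names of Pods whose STATUS column shows an image pull problem.
# Reads `kubectl get pods` output on stdin; the header line is skipped.
image_pull_failures() {
  awk 'NR > 1 && ($3 == "ImagePullBackOff" || $3 == "ErrImagePull") { print $1 }'
}
```

Usage: `sudo microk8s kubectl get pods | image_pull_failures` prints `mydeployment` for the output above. Follow it with `sudo microk8s kubectl describe pod mydeployment`.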

References

- https://ubuntu.com/tutorials/install-a-local-kubernetes-with-microk8s#1-overview
